Boosting AI: The Quiet Power of Quantization

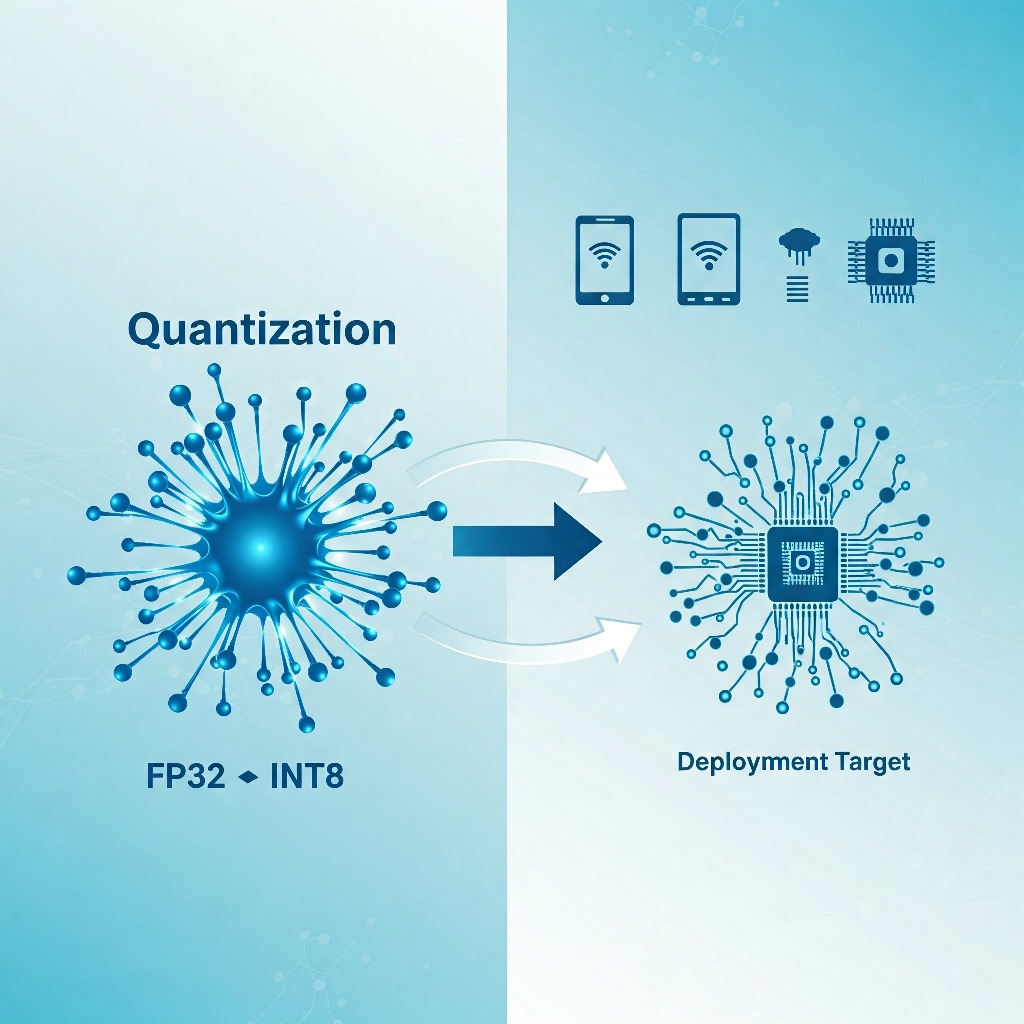

Deep learning models keep growing more complex, and that growth creates a tension. These architectures achieve state-of-the-art performance across diverse tasks, yet their computational and memory demands often make them impractical to deploy in resource-constrained environments. Model quantization is one of the main optimization techniques for closing this gap. At its core, quantization converts the high-precision floating-point parameters of a neural network (typically 32-bit, FP32) and, optionally, its activations into lower-precision numerical formats such as 8-bit integers (INT8), 4-bit integers (INT4), or even binary representations. The reduced precision can substantially shrink model size, inference latency, and power consumption, which makes it feasible to deploy powerful AI capabilities on edge devices, mobile platforms, and latency-sensitive cloud applications.

Why Quantize? The Driving Forces Behind Model Optimization

The motivations underpinning the adoption of AI model quantization are multifaceted and compelling. Primarily, it addresses the critical need for operational efficiency:

- Reduced Model Size: Lower-precision representations require significantly less storage space. Converting FP32 parameters to INT8, for instance, theoretically reduces model size by approximately 75%. This is crucial for over-the-air updates and deployment on devices with limited storage capacity.

- Accelerated Inference Speed: Integer arithmetic is generally cheaper than floating-point arithmetic, and many modern CPUs, GPUs, and specialized accelerators such as TPUs and NPUs provide dedicated low-precision integer instructions that execute it faster. Where that hardware support exists, this translates to lower latency and higher throughput during inference.

- Lower Power Consumption: Reduced computational complexity and memory access frequency lead to decreased energy usage. This benefit is paramount for battery-powered devices (e.g., smartphones, IoT sensors, wearables) and for reducing the operational costs and environmental footprint of large-scale data centers.

- Enhanced Accessibility: By mitigating the hardware requirements, quantization democratizes access to advanced AI models, allowing them to run effectively on a broader range of consumer and industrial hardware.

- Improved Memory Bandwidth Utilization: Lower bit-width data requires less memory bandwidth for transfer between memory and processing units, alleviating a common bottleneck in deep learning computations.
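The size reduction quoted above is simple arithmetic, and a short sketch makes it concrete. The 25-million-parameter model is a made-up figure for illustration, and the calculation counts parameter storage only; real quantized files also carry scale and zero-point metadata, so the actual saving is usually slightly smaller.

```python
def model_size_mb(num_params: int, bits_per_param: int) -> float:
    """Approximate storage for the parameters alone, in megabytes (10**6 bytes)."""
    return num_params * bits_per_param / 8 / 1e6

# Hypothetical model size, chosen only to make the numbers round.
NUM_PARAMS = 25_000_000

fp32_mb = model_size_mb(NUM_PARAMS, 32)
int8_mb = model_size_mb(NUM_PARAMS, 8)
int4_mb = model_size_mb(NUM_PARAMS, 4)
reduction = 1 - int8_mb / fp32_mb

print(f"FP32: {fp32_mb:.1f} MB, INT8: {int8_mb:.1f} MB, INT4: {int4_mb:.1f} MB")
print(f"FP32 -> INT8 reduction: {reduction:.0%}")
```

The same ratio applies to the bytes moved between memory and compute units, which is why the bandwidth benefit tracks the size benefit.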

Delving Deeper: The Mechanics of Quantization

The process of transforming a high-precision model into its lower-precision counterpart involves several intricate steps and considerations. It's not merely a truncation of bits but a carefully calibrated mapping process.

From Floating-Point to Fixed-Point: The Fundamental Shift

Neural networks are typically trained using 32-bit floating-point numbers (FP32), which offer a wide dynamic range and high precision, suitable for capturing the subtle gradients during backpropagation. Inference, however, often tolerates lower precision. Quantization maps these FP32 values to a lower-precision format, most commonly fixed-point integers (like INT8). This mapping requires defining the range [min, max] of the original FP32 values and mapping it onto the available integer range (e.g., [-128, 127] for signed INT8). The relationship is often defined by a scale factor (S) and a zero-point (Z):

real_value ≈ S * (quantized_value - Z)

Here, S determines the step size between quantized levels, and Z represents the integer value corresponding to the real value zero. Accurate determination of S and Z is critical for minimizing quantization error.
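The relationship above can be sketched in a few lines of plain Python. This is a minimal illustration rather than any framework's implementation: the function names are hypothetical, the range [-1.0, 3.0] is an arbitrary example, and the range is widened to include 0.0 so that real zero maps exactly onto an integer.

```python
def affine_params(x_min: float, x_max: float, q_min: int = -128, q_max: int = 127):
    """Derive scale S and zero-point Z that map [x_min, x_max] onto [q_min, q_max]."""
    # Widen the range to include 0.0 so that real zero is exactly representable.
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)
    scale = (x_max - x_min) / (q_max - q_min)
    if scale == 0.0:  # degenerate all-zero tensor
        return 1.0, 0
    zero_point = round(q_min - x_min / scale)
    zero_point = max(q_min, min(q_max, zero_point))
    return scale, zero_point

def quantize(x: float, scale: float, zero_point: int,
             q_min: int = -128, q_max: int = 127) -> int:
    """Round to the nearest integer level and clamp to the representable range."""
    q = round(x / scale) + zero_point
    return max(q_min, min(q_max, q))

def dequantize(q: int, scale: float, zero_point: int) -> float:
    """real_value ~= S * (quantized_value - Z)"""
    return scale * (q - zero_point)

scale, zero_point = affine_params(-1.0, 3.0)
samples = [-1.0, -0.3, 0.0, 0.5, 1.7, 3.0]
errors = [abs(x - dequantize(quantize(x, scale, zero_point), scale, zero_point))
          for x in samples]
print(f"S = {scale:.6f}, Z = {zero_point}, max round-trip error = {max(errors):.6f}")
```

For values inside the range, the round-trip error stays within half a step (S / 2), which is why a tight range matters: a smaller S means a finer grid.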

Mapping Strategies: Linear vs. Non-Linear Quantization

- Linear (Affine) Quantization: This is the most common approach, where FP32 values are mapped onto evenly spaced integer levels. It is computationally simple, but the even spacing works best when values are spread fairly evenly across the range. A few outliers can stretch the range and leave only coarse resolution for the bulk of the values, which increases the error on non-uniformly distributed tensors. Symmetric linear quantization sets Z=0, simplifying calculations further, especially if hardware supports it efficiently. Asymmetric linear quantization uses a non-zero Z to better fit potentially skewed distributions.

- Non-Linear Quantization: These methods use non-uniform steps between quantization levels, potentially clustering more precision around frequently occurring values (like zero) and using less precision for sparse outliers. Techniques like logarithmic quantization fall into this category. While potentially offering better accuracy for certain distributions, they often require more complex hardware support for efficient execution.
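To make the symmetric versus asymmetric distinction concrete, the sketch below quantizes a one-sided tensor both ways. The activation values are invented (they stand in for something like a post-ReLU output), and the helper names are hypothetical. Because the data never goes negative, the symmetric scheme spends half of its integer range on values that never occur, so its step size is roughly twice as large.

```python
Q_MIN, Q_MAX = -128, 127

def symmetric_params(values):
    """Z is fixed at 0; the covered range is [-max|x|, +max|x|]."""
    max_abs = max(abs(v) for v in values)
    return (max_abs / Q_MAX) or 1.0, 0

def asymmetric_params(values):
    """Z shifts the integer grid so [min, max] uses all 256 levels."""
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)
    scale = (hi - lo) / (Q_MAX - Q_MIN) or 1.0
    zero_point = round(Q_MIN - lo / scale)
    return scale, zero_point

def round_trip(v, scale, zero_point):
    """Quantize then dequantize a single value."""
    q = max(Q_MIN, min(Q_MAX, round(v / scale) + zero_point))
    return scale * (q - zero_point)

# Made-up, non-negative activations (a skewed, one-sided distribution).
activations = [0.0, 0.1, 0.4, 1.2, 2.5, 6.0]

sym_scale, sym_zp = symmetric_params(activations)
asym_scale, asym_zp = asymmetric_params(activations)
sym_err = max(abs(v - round_trip(v, sym_scale, sym_zp)) for v in activations)
asym_err = max(abs(v - round_trip(v, asym_scale, asym_zp)) for v in activations)

print(f"symmetric:  S = {sym_scale:.5f}, Z = {sym_zp}, max error = {sym_err:.5f}")
print(f"asymmetric: S = {asym_scale:.5f}, Z = {asym_zp}, max error = {asym_err:.5f}")
```

The trade-off is that a non-zero Z adds extra terms to every integer multiply-accumulate, which is one reason symmetric quantization is often preferred for weights.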

Calibration: Aligning Quantized Ranges

To determine the appropriate mapping parameters (min/max range, or directly S and Z), a crucial step called calibration is often required, particularly for Post-Training Quantization (PTQ). Calibration involves feeding a representative dataset (a subset of the training or validation data) through the original FP32 model and observing the activation ranges at various layers. These observed ranges inform the selection of quantization parameters that minimize information loss for typical inputs. The quality and representativeness of the calibration dataset significantly impact the final accuracy of the quantized model.
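A calibration pass can be pictured as an observer attached to a layer that records the range of everything flowing through it. The class below is a minimal sketch with hypothetical names, and the three batches are invented numbers standing in for activations captured from a real model. Production toolkits often go further, for example with moving averages or percentile clipping to reduce the influence of outliers.

```python
class MinMaxObserver:
    """Tracks the running min/max of every value seen during calibration."""

    def __init__(self):
        self.min_val = float("inf")
        self.max_val = float("-inf")

    def observe(self, activations):
        self.min_val = min(self.min_val, min(activations))
        self.max_val = max(self.max_val, max(activations))

    def quant_params(self, q_min=-128, q_max=127):
        """Turn the observed range into a scale S and zero-point Z."""
        lo, hi = min(self.min_val, 0.0), max(self.max_val, 0.0)
        scale = (hi - lo) / (q_max - q_min) or 1.0
        zero_point = max(q_min, min(q_max, round(q_min - lo / scale)))
        return scale, zero_point

# Stand-in for activations captured at one layer over three calibration batches.
calibration_batches = [
    [-0.5, 0.2, 1.0],
    [-1.5, 0.0, 2.0],
    [-0.25, 0.75, 3.6],
]

observer = MinMaxObserver()
for batch in calibration_batches:
    observer.observe(batch)

scale, zero_point = observer.quant_params()
print(f"observed range: [{observer.min_val}, {observer.max_val}]")
print(f"S = {scale:.4f}, Z = {zero_point}")
```

If the calibration batches miss values that appear in production, the frozen range will clip them, which is why representativeness matters so much.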

A Spectrum of Techniques: Exploring Quantization Methodologies

Several distinct approaches exist for implementing AI model quantization, each with its own trade-offs between ease of implementation, computational overhead during the process, and final model accuracy.

Post-Training Quantization (PTQ): Simplicity and Speed

PTQ is often the simplest method to apply. It involves quantizing a pre-trained FP32 model without requiring retraining. This makes it fast and convenient.

Static PTQ

Static PTQ requires calibration. The ranges of weights and activations are determined offline using the calibration dataset. These static ranges and the derived quantization parameters (S and Z) are then reused unchanged during inference. Static PTQ generally achieves lower inference latency than dynamic PTQ because the activation quantization parameters are pre-computed rather than calculated for every input.

Dynamic PTQ

Dynamic PTQ (also known as dynamic range quantization) typically quantizes weights offline but determines the quantization parameters for activations on the fly during inference, based on the observed range of each activation tensor for the current input. This avoids the need for a calibration dataset for activations but adds computational overhead at inference time to calculate these parameters. It's often used when obtaining a representative calibration set is difficult or when activation ranges vary significantly between inputs.
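The practical difference between the two PTQ variants shows up when an input falls outside the range seen during calibration. The sketch below uses symmetric quantization with Z = 0 for brevity; the calibrated range of 2.0 and the input values are assumptions chosen to trigger clipping, and the function names are hypothetical.

```python
Q_MAX = 127  # symmetric signed INT8 with Z = 0, using the range [-127, 127]

def quantize_symmetric(values, scale):
    return [max(-Q_MAX, min(Q_MAX, round(v / scale))) for v in values]

def static_quantize(values, calibrated_scale):
    """Static PTQ: the scale was fixed offline during calibration."""
    return quantize_symmetric(values, calibrated_scale), calibrated_scale

def dynamic_quantize(values):
    """Dynamic PTQ: the scale is recomputed from each incoming tensor."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / Q_MAX or 1.0
    return quantize_symmetric(values, scale), scale

def dequantize(q_values, scale):
    return [q * scale for q in q_values]

calibrated_scale = 2.0 / Q_MAX     # assumes calibration only saw |x| <= 2.0
unusual_input = [0.5, -1.2, 8.0]   # 8.0 lies outside the calibrated range

static_q, s_scale = static_quantize(unusual_input, calibrated_scale)
dynamic_q, d_scale = dynamic_quantize(unusual_input)
static_out = dequantize(static_q, s_scale)
dynamic_out = dequantize(dynamic_q, d_scale)

print("static :", [round(v, 3) for v in static_out])
print("dynamic:", [round(v, 3) for v in dynamic_out])
```

The static path clips 8.0 down to the calibrated limit, while the dynamic path preserves it at the cost of a coarser grid for the small values and an extra max-reduction over the tensor on every call.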

Quantization-Aware Training (QAT): Preserving Accuracy

QAT introduces the simulation of quantization effects during the training or fine-tuning process. It inserts "fake" quantization operations into the computational graph. These operations simulate the precision loss of quantization during the forward pass and use techniques like the Straight-Through Estimator (STE) to approximate gradients for the non-differentiable quantization step during the backward pass. By allowing the model to adapt its weights to the reduced precision during training, QAT can often recover most, if not all, of the accuracy lost due to quantization, typically outperforming PTQ, especially for more aggressive quantization (e.g., INT4 or lower) or highly sensitive models. However, it requires access to the original training pipeline and dataset and incurs additional training time.
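The fake-quantization idea can be shown on a single scalar weight without any framework. This is a toy sketch under stated assumptions: the function names are hypothetical, the step size of 0.1 and the target of 0.73 are arbitrary, and the variant of the Straight-Through Estimator used here passes the gradient through unchanged inside the representable range and zeroes it where the forward pass clipped.

```python
def fake_quantize(x, scale, q_min=-128, q_max=127):
    """Forward pass: quantize, then immediately dequantize (stays in float)."""
    q = max(q_min, min(q_max, round(x / scale)))
    return q * scale

def ste_grad(x, upstream_grad, scale, q_min=-128, q_max=127):
    """Backward pass: treat round() as the identity inside the representable
    range, and block the gradient where the forward pass would have clipped."""
    in_range = q_min * scale <= x <= q_max * scale
    return upstream_grad if in_range else 0.0

# Toy problem: fit y = w * x to a single data point using squared error.
scale, lr = 0.1, 0.1
x, target = 1.0, 0.73
w = 0.0  # latent full-precision weight that the optimizer updates

for _ in range(20):
    w_q = fake_quantize(w, scale)              # the forward pass sees w_q
    grad_wq = 2.0 * (w_q * x - target) * x     # d(loss)/d(w_q)
    w -= lr * ste_grad(w, grad_wq, scale)      # STE: reuse it as d(loss)/d(w)

final_w_q = fake_quantize(w, scale)
print(f"latent w = {w:.3f}, quantized w = {final_w_q:.1f}, target = {target}")
```

The latent weight stays in floating point throughout training; only the value used in the forward pass is snapped to the grid, so the optimizer learns weights that still work after real quantization.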

Binary and Ternary Quantization: Extreme Compression

These are more aggressive forms where weights and/or activations are constrained to only two (+1, -1) or three (+1, 0, -1) values, respectively. This offers maximal compression and potential for significant speedup using bitwise operations but usually comes at the cost of a more substantial accuracy drop and often necessitates specialized training techniques (like QAT) to achieve acceptable performance.
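The bitwise speedup mentioned above comes from the fact that a dot product of two +1/-1 vectors reduces to an XNOR followed by a population count. The sketch below illustrates the identity in plain Python with hypothetical helper names and made-up weights; real kernels apply the same trick to machine words with hardware popcount instructions.

```python
def binarize(weights):
    """Map each weight to +1 or -1 by sign (zero is sent to +1 here)."""
    return [1 if w >= 0 else -1 for w in weights]

def ternarize(weights, threshold):
    """Map each weight to +1, 0, or -1; small magnitudes collapse to 0."""
    return [0 if abs(w) <= threshold else (1 if w > 0 else -1) for w in weights]

def pack_bits(signs):
    """Pack a +1/-1 vector into an int: bit i is set where signs[i] == +1."""
    word = 0
    for i, s in enumerate(signs):
        if s == 1:
            word |= 1 << i
    return word

def xnor_popcount_dot(a_bits, b_bits, n):
    """Dot product of two packed +1/-1 vectors of length n.

    XNOR marks positions where the signs agree. Each agreement contributes +1
    and each disagreement contributes -1, so dot = 2 * matches - n.
    """
    mask = (1 << n) - 1
    matches = bin(~(a_bits ^ b_bits) & mask).count("1")
    return 2 * matches - n

weights = [0.7, -0.2, 0.05, -1.3]      # made-up values
activations = [1.0, 0.4, -0.6, -0.1]   # made-up values

bw, ba = binarize(weights), binarize(activations)
reference = sum(w * a for w, a in zip(bw, ba))
fast = xnor_popcount_dot(pack_bits(bw), pack_bits(ba), len(bw))

print("binary weights :", bw)
print("ternary weights:", ternarize(weights, threshold=0.1))
print("dot product    :", reference, "(reference) vs", fast, "(XNOR + popcount)")
```

Methods in this family commonly multiply the result by a per-layer or per-channel floating-point scaling factor to recover some of the lost magnitude information; that step is omitted here.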

Mixed-Precision Quantization: Balancing Act

Recognizing that different layers or parts of a model may have varying sensitivity to precision reduction, mixed-precision quantization applies different bit-widths to different components. More sensitive layers might retain higher precision (e.g., FP16 or INT8), while less sensitive layers could be aggressively quantized (e.g., INT4). This requires sophisticated analysis or automated search algorithms to determine the optimal precision configuration that balances accuracy and efficiency.
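As a rough illustration of the balancing act, the sketch below applies the simplest possible policy: a sensitivity threshold that decides between INT8 and INT4 per layer. The layer names, parameter counts, and sensitivity scores are all invented, and the threshold rule is a stand-in for the more sophisticated analysis or search that real mixed-precision tools perform.

```python
# (name, parameter count, sensitivity score): every value here is made up.
layers = [
    ("embed", 4_000_000, 0.9),
    ("block1", 8_000_000, 0.2),
    ("block2", 8_000_000, 0.1),
    ("head", 1_000_000, 0.8),
]

def assign_bit_widths(layers, threshold=0.5, high_bits=8, low_bits=4):
    """Keep sensitive layers at high_bits; quantize the rest to low_bits."""
    return {name: (high_bits if sens >= threshold else low_bits)
            for name, _, sens in layers}

def total_size_mb(layers, bit_widths):
    """Parameter storage in megabytes (10**6 bytes) for a given assignment."""
    return sum(n * bit_widths[name] for name, n, _ in layers) / 8 / 1e6

plan = assign_bit_widths(layers)
mixed_mb = total_size_mb(layers, plan)
int8_mb = total_size_mb(layers, {name: 8 for name, _, _ in layers})
int4_mb = total_size_mb(layers, {name: 4 for name, _, _ in layers})

print("bit-width plan:", plan)
print(f"all INT8: {int8_mb} MB, mixed: {mixed_mb} MB, all INT4: {int4_mb} MB")
```

In this toy example the mixed plan lands between the two uniform options in size while protecting the two layers flagged as sensitive.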

Navigating the Trade-offs: Accuracy vs. Efficiency

The primary challenge in AI model quantization lies in managing the inherent trade-off between computational efficiency gains and potential degradation in model accuracy. Reducing numerical precision inevitably introduces quantization error (the difference between the original FP32 value and its quantized representation).
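How quickly that error grows as bits are removed can be measured directly. The sketch below assumes symmetric uniform quantization with Z = 0 over the range [-1, 1] and sweeps an evenly spaced set of sample values; both the range and the function name are assumptions made for illustration.

```python
def worst_case_error(values, bits, max_abs=1.0):
    """Largest round-trip error over `values`, plus the step size S."""
    q_max = 2 ** (bits - 1) - 1     # e.g. 127 for 8 bits, 7 for 4 bits
    scale = max_abs / q_max
    worst = 0.0
    for v in values:
        q = max(-q_max, min(q_max, round(v / scale)))
        worst = max(worst, abs(v - scale * q))
    return worst, scale

samples = [i / 500 - 1.0 for i in range(1001)]   # evenly spaced in [-1, 1]
results = {bits: worst_case_error(samples, bits) for bits in (8, 4, 2)}

for bits, (worst, scale) in results.items():
    print(f"{bits}-bit: step S = {scale:.5f}, worst error = {worst:.5f}")
```

For in-range values the error never exceeds half a step, and the step itself roughly doubles with every bit removed, which is why INT4 and below are so much harder to deploy without retraining than INT8.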

Potential Accuracy Degradation

The accumulation of quantization errors throughout the network's layers can lead to a noticeable drop in performance on the target task (e.g., classification accuracy, object detection mAP). Models with wide dynamic ranges, sensitive activation functions, or deep architectures can be particularly susceptible.

Sensitivity Analysis and Mitigation Strategies

To counteract accuracy loss, several strategies can be employed:

- Sensitivity Analysis: Identifying which layers are most impacted by quantization allows for targeted mitigation, such as keeping those layers in higher precision (mixed-precision).

- Quantization-Aware Training (QAT): As discussed, training the model to be robust to quantization noise is often the most effective way to preserve accuracy.

- Calibration Dataset Quality: Ensuring the calibration dataset for PTQ accurately reflects the real-world data distribution is vital.

- Fine-tuning: Minor fine-tuning of the quantized model (especially after PTQ) on a small amount of data can sometimes recover lost accuracy.

- Bias Correction: Adjusting biases post-quantization can help compensate for shifts in the mean caused by the quantization process.
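Of the strategies above, bias correction is the easiest to show in a few lines. The sketch below covers one simple form of it for a single linear neuron: weight quantization shifts the expected output by (w_q - w) · E[x], so subtracting that shift from the bias restores the mean. The weights, bias, and calibration inputs are invented, and the helper names are hypothetical.

```python
def quantize_weights(weights, bits=4):
    """Symmetric fake-quantization of a weight vector (returns float values)."""
    q_max = 2 ** (bits - 1) - 1
    scale = max(abs(w) for w in weights) / q_max
    return [scale * max(-q_max, min(q_max, round(w / scale))) for w in weights]

def linear(weights, bias, x):
    return sum(w * xi for w, xi in zip(weights, x)) + bias

def mean(values):
    return sum(values) / len(values)

weights = [0.62, -0.35, 0.11]   # made-up FP32 weights for one output neuron
bias = 0.05
calibration_inputs = [[1.0, 2.0, 0.5], [0.8, 1.5, 0.7], [1.2, 2.5, 0.3]]

w_q = quantize_weights(weights)

# Expected output shift introduced by weight quantization: (w_q - w) . E[x]
mean_input = [mean(col) for col in zip(*calibration_inputs)]
shift = sum((wq - w) * m for wq, w, m in zip(w_q, weights, mean_input))
corrected_bias = bias - shift

fp_mean = mean([linear(weights, bias, x) for x in calibration_inputs])
raw_mean = mean([linear(w_q, bias, x) for x in calibration_inputs])
fixed_mean = mean([linear(w_q, corrected_bias, x) for x in calibration_inputs])

print(f"FP32 mean output:           {fp_mean:.5f}")
print(f"quantized, original bias:   {raw_mean:.5f}")
print(f"quantized, corrected bias:  {fixed_mean:.5f}")
```

The correction removes only the systematic shift in the mean; the per-input variation caused by quantization noise remains, which is where QAT or fine-tuning may still be needed.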

Real-World Applications: Where Quantization Shines

AI model quantization is not merely a theoretical optimization; it is a critical enabler for numerous practical AI deployments:

- Edge Computing and IoT Devices: Running complex models (e.g., for computer vision, keyword spotting, anomaly detection) directly on resource-limited edge devices with constrained power, compute, and memory.

- Mobile Applications: Enabling sophisticated on-device AI features in smartphones and tablets (e.g., real-time image filters, natural language processing, augmented reality) without relying solely on cloud connectivity or draining the battery.

- Large-Scale Cloud Deployments: Reducing the latency and computational cost of serving inference requests at scale in data centers, leading to lower operational expenses and improved user experience.

- Autonomous Systems: Facilitating real-time decision-making in autonomous vehicles, drones, and robotics where low latency and power efficiency are non-negotiable safety and operational requirements.

Tools and Frameworks: Enabling Quantization in Practice

The widespread adoption of quantization has been facilitated by robust support within major deep learning frameworks and specialized toolkits:

- TensorFlow Lite: Provides comprehensive tools for both PTQ (static and dynamic) and QAT, targeting deployment on mobile, embedded, and IoT devices.

- PyTorch: Offers modules and APIs (the `torch.ao.quantization` package) for implementing PTQ (static and dynamic) and QAT within the PyTorch ecosystem, with backend support that depends on the quantization mode and target hardware.

- ONNX Runtime: Supports quantized models in the Open Neural Network Exchange (ONNX) format, enabling deployment across diverse hardware platforms with optimized execution providers.

- NVIDIA TensorRT: A high-performance inference optimizer and runtime that heavily leverages quantization (particularly INT8) to maximize throughput on NVIDIA GPUs.

- Specialized Hardware Accelerators (TPUs, NPUs): Many dedicated AI accelerators are explicitly designed to perform low-precision integer arithmetic (especially INT8 matrix multiplications) extremely efficiently, making quantization a prerequisite for unlocking their full potential.

The Future Trajectory: Innovations in AI Model Quantization

Research and development in AI model quantization continue unabated, focusing on further enhancing efficiency while minimizing accuracy loss:

- Adaptive and Learned Quantization: Developing methods where quantization parameters (bit-widths, ranges, non-linear mappings) are learned automatically during training or optimized per-instance during inference.

- Hardware-Software Co-design: Designing hardware architectures and quantization algorithms synergistically to maximize performance and efficiency for low-precision computations.

- Quantization for Emerging Architectures: Adapting and refining quantization techniques for newer model types, such as Transformers and Graph Neural Networks, which exhibit different characteristics and sensitivities compared to traditional CNNs.

- Improved Training Techniques: Creating more sophisticated QAT methods and alternatives that achieve high accuracy even at ultra-low bit-widths (e.g., sub-4-bit).

- Accuracy Guarantees and Robustness: Developing theoretical understandings and practical methods to provide guarantees on the performance degradation or improve the robustness of quantized models against adversarial attacks or domain shifts.

Quantization as a Cornerstone of Practical AI

AI model quantization has transitioned from a niche optimization technique to a fundamental requirement for deploying artificial intelligence effectively and efficiently in the real world. By drastically reducing model size, accelerating inference speed, and lowering power consumption, quantization bridges the gap between computationally intensive state-of-the-art models and the constraints of practical hardware environments. While challenges related to accuracy preservation persist, ongoing advancements in methodologies like QAT, mixed-precision deployment, and hardware co-design continue to push the boundaries. As AI becomes increasingly ubiquitous, mastering the art and science of AI model quantization will remain paramount for unlocking its full potential across the spectrum of applications, from minuscule IoT sensors to planet-scale cloud infrastructure. It represents not just an optimization, but a crucial enabler for the future of accessible, sustainable, and performant artificial intelligence.