The Different Types of AI Explained

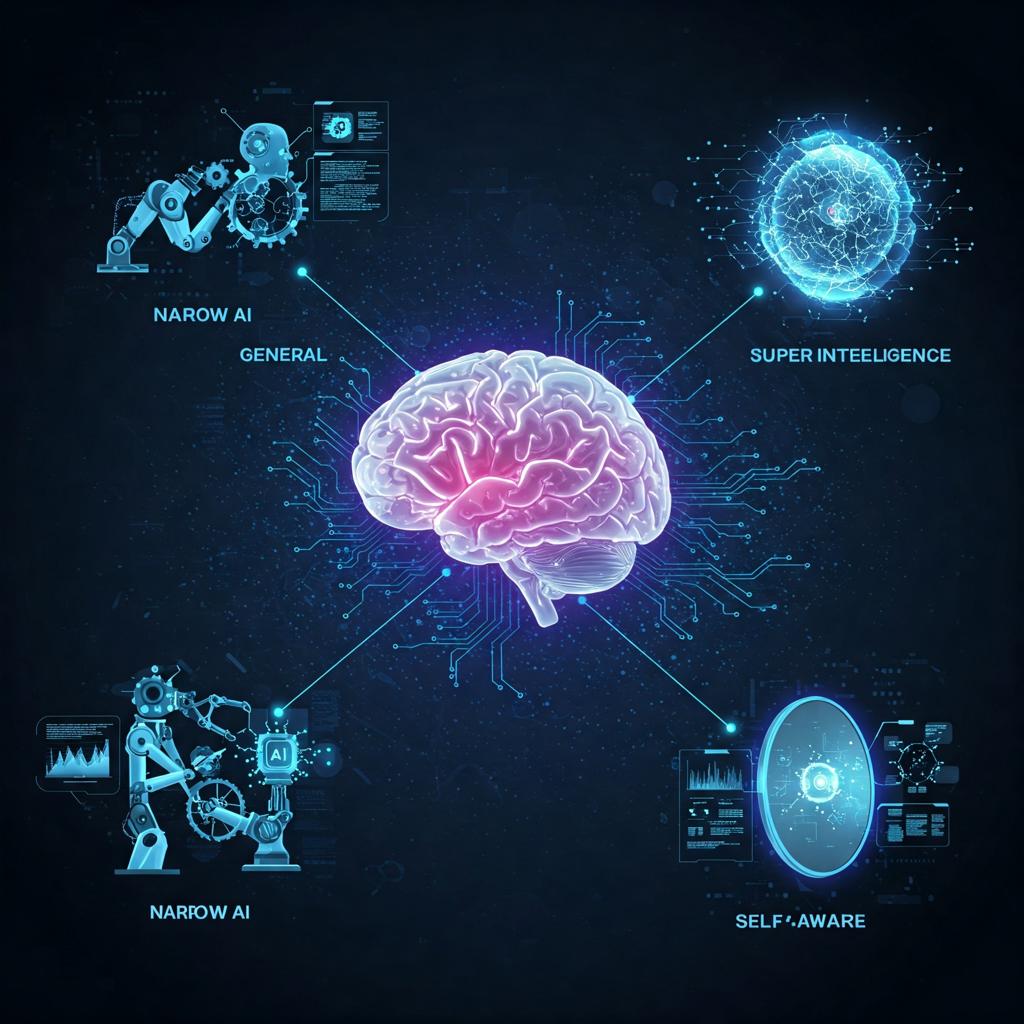

There are different types of artificial intelligence (AI), and they can be classified in various ways depending on their capabilities, learning methods, and areas of application.

1. Weak AI (Narrow AI)

This is artificial intelligence that is designed to perform a specific task. It is very common today and includes applications such as search engines, recommendation systems, chatbots, self-driving cars, and automated medical diagnostic systems.

- Examples: Siri, Alexa, facial recognition systems, machine translation software, ChatGPT.

| Type of Weak AI | Examples | Main Function |
|---|---|---|
| Speech and language recognition | Siri, Alexa, Google Assistant | Interpret and respond to voice commands |
| Facial and image recognition | Face ID, smart surveillance systems | Identify faces, objects, and environments |
| Chatbots and virtual assistants | Bank chatbots, website helpdesk bots | Automate conversations and provide support |
| Search engines and recommendation systems | Google Search, Netflix, Amazon | Suggest personalized content or products |
| Basic autonomous driving | Tesla Autopilot (SAE Level 2) | Assist with driving, parking, and automatic braking |
| AI in video games | NPCs in FIFA, GTA, The Last of Us | Simulate realistic and adaptive in-game behaviors |
| AI-assisted medical diagnosis | IBM Watson Health, Aidoc | Support medical diagnosis and image analysis |
| Fraud detection | Banking and financial fraud detection AI | Analyze and block suspicious transactions |
| Automated financial trading | Crypto bots, stock market algorithms | Make fast investment decisions based on data |
| Machine translation | Google Translate, DeepL | Provide real-time translation with increasing accuracy |

2. Strong AI (General AI)

This is theoretical artificial intelligence that possesses human-like cognitive abilities, capable of understanding, learning, and applying knowledge in different contexts. There are no concrete examples of strong AI yet; it is a future direction in AI research.

- Example: A system that could, in theory, perform any human cognitive task, such as solving complex problems, understanding emotions, and making decisions independently.

| Type of Strong AI (General AI) | Description |
|---|---|
| Cognitive Architecture-based AI | Models inspired by human cognition, such as SOAR or ACT-R, simulating general intelligence mechanisms. |
| Whole Brain Emulation (WBE) | Simulates the entire structure and function of the human brain digitally. |
| Integrated Learning Systems | Combines multiple learning paradigms to adapt across diverse tasks like a human. |
| Embodied AI | AI with a physical or simulated body that learns through interaction with the environment. |
| Self-improving AI | AI that autonomously enhances its own algorithms and knowledge base over time. |
| Artificial Consciousness | Theoretical AI capable of self-awareness, emotions, and understanding its own existence. |

3. Superintelligence

This refers to a form of artificial intelligence that surpasses human intellectual capabilities in virtually all areas, including creativity, complex problem solving, and the ability to learn and adapt. This type of AI is purely theoretical and remains a future vision in AI discussions.

- Example: An AI that is significantly more intelligent than any human being in every field, including areas such as science, medicine, and philosophy.

| Type of Superintelligence | Description |
|---|---|
| Speed Superintelligence | Processes information and makes decisions far faster than the human brain. |
| Collective Superintelligence | Emerges from networks of AI systems working together with shared intelligence. |
| Quality Superintelligence | Possesses cognitive abilities far superior to the best human minds in every field. |
| Artificial Superintelligence (ASI) | Fully autonomous intelligence surpassing human reasoning, creativity, and problem-solving. |
| Recursive Self-improving AI | Continuously improves its own design, leading to rapid and exponential intelligence growth. |
| Strategic Superintelligence | Excels in long-term planning, manipulation, and achieving complex goals with high efficiency. |

4. Reactive AI

This type of AI is designed to respond to specific inputs without storing past experiences. These artificial intelligences do not have a ‘memory’ and act only on the basis of current information.

- Example: Game-playing systems such as IBM's Deep Blue, which can play chess but does not retain any memory of previous games.

| Type of Reactive AI | Description |
|---|---|
| Simple Reactive Machines | Respond to current inputs with predefined rules, without memory or learning. |
| Rule-Based Reactive Systems | Operate based on a set of fixed rules triggered by specific stimuli. |
| Sensor-Based Reactive AI | Uses real-time sensor data to make immediate decisions, common in robotics. |
| Finite State Machines | Transition between states based solely on current input conditions. |
| Game-Playing Reactive AI | Makes decisions in real-time based on the current game state (e.g., Deep Blue). |
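
To make the reactive idea concrete, here is a minimal Python sketch of two entries from the table: a sensor-based rule and a finite state machine. The traffic-light states, the signal names `"timer"` and `"emergency"`, and the two-second braking rule are all assumptions made up for illustration; they are not taken from any real system.

```python
from enum import Enum


class Light(Enum):
    RED = "red"
    GREEN = "green"
    YELLOW = "yellow"


# Transition table: (current state, current input) -> next state.
# The signal names "timer" and "emergency" are hypothetical.
TRANSITIONS = {
    (Light.RED, "timer"): Light.GREEN,
    (Light.GREEN, "timer"): Light.YELLOW,
    (Light.YELLOW, "timer"): Light.RED,
    (Light.GREEN, "emergency"): Light.RED,
    (Light.YELLOW, "emergency"): Light.RED,
}


def next_state(state: Light, signal: str) -> Light:
    """Finite state machine step: unknown (state, signal) pairs keep the state."""
    return TRANSITIONS.get((state, signal), state)


def reactive_brake(distance_m: float, speed_mps: float) -> str:
    """Sensor-based rule: decide from the current reading only, with no history."""
    if distance_m < speed_mps * 2.0:  # obstacle is less than ~2 seconds away
        return "brake"
    return "cruise"
```

For example, `next_state(Light.RED, "timer")` returns `Light.GREEN`, and `reactive_brake(10.0, 10.0)` returns `"brake"`. Neither function records what happened on earlier calls, which is the defining trait of a reactive system.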

5. Limited Memory AI

This artificial intelligence has the ability to store some information to make better decisions over time. For example, self-driving cars use limited memory AI to remember previous situations and improve navigation.

- Example: Autonomous vehicles that learn from past experiences to improve their decision-making abilities.

| Type of Limited Memory AI | Description |
|---|---|
| Supervised Learning AI | Learns from historical data labeled by humans to make predictions. |
| Unsupervised Learning AI | Analyzes patterns and structures in unlabeled data for clustering or association. |
| Reinforcement Learning AI | Learns through trial and error using rewards and penalties. |
| Semi-Supervised Learning AI | Combines small amounts of labeled data with large amounts of unlabeled data. |
| Time-Series Predictive AI | Uses historical time-based data to forecast future outcomes. |
| Self-Driving Car Systems | Apply real-time data and limited past experiences to make driving decisions. |
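
As a rough illustration of "limited memory", the sketch below keeps only the last few distance readings to a vehicle ahead and decides from their trend. The class name `LimitedMemoryFollower`, the window size, and the action strings are hypothetical choices for this example, not how any production driving system works.

```python
from collections import deque


class LimitedMemoryFollower:
    """Keeps only the last `window` gap readings to the vehicle ahead."""

    def __init__(self, window: int = 3):
        # deque(maxlen=...) silently drops the oldest reading when full.
        self.gaps = deque(maxlen=window)

    def observe(self, gap_m: float) -> str:
        """Store the new reading and decide from the short-term trend."""
        self.gaps.append(gap_m)
        if len(self.gaps) < 2:
            return "hold"  # not enough history yet
        trend = self.gaps[-1] - self.gaps[0]
        if trend < 0:
            return "slow down"  # the gap is shrinking
        if trend > 0:
            return "speed up"  # the gap is growing
        return "hold"
```

Unlike a purely reactive rule, the decision here depends on recent observations as well as the current one, but anything older than the window is forgotten.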

6. Theory of Mind AI

This type of AI would be able to understand and simulate the mental states of humans, such as thoughts, emotions, intentions, and perceptions. It is a future direction of artificial intelligence, based on the idea that an AI with this ability could interact more naturally and empathetically with humans.

- Example: A robot or assistant that understands how a person feels and adapts its behavior accordingly.

| Type of Theory of Mind AI | Description |
|---|---|
| Emotion Recognition AI | Identifies and responds to human emotions through voice, facial expressions, or behavior. |
| Belief Modeling AI | Simulates and interprets human beliefs, intentions, and desires. |
| Cognitive Modeling AI | Mimics human thought processes to predict behavior in complex situations. |
| Social Interaction AI | Engages in dynamic, context-aware conversations considering social cues. |
| Perspective-Taking AI | Understands and reacts based on another entity’s point of view or context. |
| Adaptive Human-Aware Systems | Adjusts behavior in response to users’ mental states and social signals. |

7. Self-aware AI

This is an advanced form of AI that, in addition to understanding and simulating emotions and thoughts, is aware of itself. This type of artificial intelligence is still theoretical and does not currently exist.

- Example: A system that is aware of its own existence, its limitations, and its internal state.

| Type of Self-aware AI | Description |
|---|---|
| Proto-Self AI | Possesses basic awareness of internal states, such as performance or energy levels. |
| Self-Monitoring AI | Tracks its own operations and outcomes to adjust actions autonomously. |
| Meta-Cognitive AI | Understands and evaluates its own thought processes and decision-making. |
| Reflective AI | Analyzes past actions and experiences to improve future behavior and reasoning. |
| Emotionally Self-Aware AI | Recognizes its own simulated emotional states and adjusts interactions accordingly. |
| Fully Conscious AI | Hypothetical AI with complete self-awareness, emotions, and subjective experience. |

8. Machine learning (ML)

This is one of the most common technologies in use today. Machine learning algorithms allow systems to learn from data and improve autonomously over time without being explicitly programmed for each task. It is the basis of many modern artificial intelligence applications.

- Examples: Classification algorithms that identify objects in images, and recommendation systems such as those used by Netflix and Amazon.

| Type of Machine Learning (ML) AI | Description |
|---|---|
| Supervised Learning | Trains on labeled data to make predictions or classify new data. |
| Unsupervised Learning | Finds patterns and structures in unlabeled data without predefined outputs. |
| Semi-Supervised Learning | Combines small amounts of labeled data with large amounts of unlabeled data. |
| Reinforcement Learning | Learns through trial and error, receiving rewards or penalties for actions. |
| Deep Learning | Uses neural networks with many layers to model complex patterns and representations. |
| Transfer Learning | Applies knowledge from one domain to solve problems in a different but related domain. |
| Online Learning | Continuously learns and updates as new data becomes available. |
| Active Learning | Selects the most informative data to be labeled and added to the training set. |
| Ensemble Learning | Combines multiple models to improve prediction accuracy. |
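
The supervised learning row can be sketched in a few lines of Python. This is a 1-nearest-neighbor classifier, chosen here only because it is one of the simplest supervised methods; the function name and the toy animal measurements are made up for illustration.

```python
import math


def nearest_neighbor_predict(train, query):
    """Return the label of the training point closest to `query`.

    `train` is a list of (features, label) pairs; features are equal-length tuples.
    """
    _, closest_label = min(train, key=lambda pair: math.dist(pair[0], query))
    return closest_label


# Toy labeled data (invented): (height_cm, weight_kg) -> label
training_data = [
    ((20.0, 4.0), "cat"),
    ((25.0, 5.0), "cat"),
    ((60.0, 30.0), "dog"),
    ((55.0, 25.0), "dog"),
]
```

Calling `nearest_neighbor_predict(training_data, (22.0, 4.5))` returns `"cat"`. The behavior comes entirely from the labeled examples: swapping in different training data changes the predictions without changing the code.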

9. Deep Learning

A subset of machine learning that uses artificial neural networks with many layers to model complex patterns in data. It is used for tasks such as speech recognition, computer vision, and machine translation.

- Examples: Facial recognition systems, deep neural network-based machine translators, autonomous vehicles.

| Type of Deep Learning | Description |
|---|---|
| Convolutional Neural Networks (CNNs) | Primarily used for image recognition and processing visual data. |
| Recurrent Neural Networks (RNNs) | Designed for sequential data, such as time series or natural language. |
| Long Short-Term Memory (LSTM) Networks | A type of RNN that overcomes issues with long-term dependencies in sequential data. |
| Generative Adversarial Networks (GANs) | Consist of two neural networks (a generator and a discriminator) trained against each other to create realistic data. |
| Autoencoders | Used for data compression and noise reduction by learning efficient representations. |
| Deep Belief Networks (DBNs) | A type of generative model used for unsupervised learning and pretraining deep networks. |
| Transformer Networks | Use attention mechanisms to process sequential data, particularly in natural language processing. |
| Capsule Networks | Aim to improve on CNNs by using capsules to recognize objects in various orientations. |
| Neural Turing Machines (NTMs) | A neural network combined with an external memory to perform tasks like algorithm execution. |
| Deep Reinforcement Learning | Combines deep learning with reinforcement learning to enable decision-making from high-dimensional inputs. |
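
All of the architectures above are built from stacked layers. As a minimal sketch of what "layers" means, the Python below runs a forward pass through a tiny fully connected network with a ReLU activation between layers. The weights are hand-picked for illustration rather than trained, and the helper names `dense`, `relu`, and `forward` are assumptions of this example.

```python
def relu(values):
    """Rectified linear unit: negative values become zero."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """One fully connected layer: output[j] = sum_i inputs[i] * weights[j][i] + biases[j]."""
    return [
        sum(x * w for x, w in zip(inputs, row)) + b
        for row, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Apply each (weights, biases) layer in turn, with ReLU between layers."""
    activations = inputs
    for index, (weights, biases) in enumerate(layers):
        activations = dense(activations, weights, biases)
        if index < len(layers) - 1:  # no activation after the final layer
            activations = relu(activations)
    return activations


# Hand-picked (untrained) weights for a 2 -> 2 -> 1 network.
layers = [
    ([[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0]),
    ([[1.0, 1.0]], [0.0]),
]
```

With these weights the network computes the absolute difference of its two inputs, so `forward([3.0, 1.0], layers)` returns `[2.0]`. In real deep learning the weights are learned from data and the networks have far more layers and units.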

10. Logic-based AI (Expert Systems)

This is a form of AI that uses a knowledge base and predefined rules to solve specific problems. These systems do not ‘learn’ autonomously, but operate on logic and rules provided by humans.

- Example: Decision support systems, such as those used in medicine to diagnose diseases.

| Type of Logic-based AI (Expert Systems) | Description |
|---|---|
| Rule-Based Expert Systems | Uses predefined rules and logic to make decisions based on input data. |
| Knowledge-Based Systems | Relies on a large knowledge base to derive conclusions and solve problems. |
| Fuzzy Logic Systems | Handles uncertainty and imprecision in decision-making with "degrees of truth." |
| Decision Support Systems | Aids decision-making by analyzing complex data using expert knowledge and logic. |
| Inference Engines | A component of expert systems that applies logical rules to the knowledge base to derive new information. |
| Case-Based Reasoning | Solves problems by retrieving similar past cases and applying the same solutions. |
| Heuristic-based Systems | Uses heuristics or rules of thumb to make educated guesses for complex problems. |
| Constraint-Based Systems | Solves problems by considering constraints and selecting solutions that satisfy them. |
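
The inference engine row can be illustrated with a small forward-chaining loop in Python: it repeatedly applies if-then rules to a set of known facts until nothing new can be derived. The rules below are invented for illustration only and are not medical guidance; `forward_chain` and `RULES` are hypothetical names.

```python
def forward_chain(facts, rules):
    """Apply if-then rules until no new facts can be derived.

    `rules` is a list of (premises, conclusion) pairs, where premises is a set.
    """
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # Fire the rule when every premise is already known.
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


# Illustrative rules only; not medical guidance.
RULES = [
    ({"fever", "cough"}, "possible flu"),
    ({"possible flu", "short of breath"}, "refer to doctor"),
]
```

Given the facts `{"fever", "cough", "short of breath"}`, the first rule adds `"possible flu"`, which in turn lets the second rule add `"refer to doctor"`. All of the knowledge lives in the human-written rules; the engine itself learns nothing.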

What is Narrow AI? Understanding the Basics of Weak AI

Narrow AI, also known as Weak AI, refers to artificial intelligence systems that are designed and trained to perform specific tasks or solve particular problems. Unlike General AI, which aims to replicate human cognitive abilities across various domains, Narrow AI is focused on a single task, such as recognizing faces or recommending products. Today, Narrow AI is the most common form of artificial intelligence used in daily life.

Characteristics of Narrow AI

Narrow AI operates within a defined scope and cannot perform beyond its programmed capabilities. These systems are designed to perform specific tasks with a high degree of efficiency, often surpassing human performance in these narrow domains. However, they lack the ability to generalize or adapt to different tasks without explicit retraining or redesign.

Some of the main characteristics of Narrow AI include:

Task-Specific: Narrow AI is optimized for a single task.

Lack of Self-awareness: These systems do not have consciousness or self-awareness.

Limited Functionality: They cannot perform tasks outside of their defined scope.

Data-Driven: Narrow AI often relies on large sets of data to function effectively.

Examples of Narrow AI

There are countless applications of Narrow AI across various industries. Here are some examples of how this technology is being used today:

1. Voice Assistants

Voice assistants like Siri, Alexa, and Google Assistant are perfect examples of Narrow AI. These systems are designed to understand and respond to voice commands, performing specific tasks like setting reminders, controlling smart home devices, and providing weather updates. However, they cannot perform tasks beyond their predefined functionalities.

2. Recommendation Systems

Recommendation engines used by platforms like Netflix, Amazon, and Spotify also fall under Narrow AI. These systems analyze user preferences, behavior, and ratings to suggest movies, products, or music. They are highly effective in recommending content but cannot engage in tasks outside their specific recommendation roles.

3. Autonomous Vehicles

Self-driving cars use Narrow AI to navigate and control the vehicle. While they can analyze real-time sensor data to make decisions about speed, direction, and obstacles, they are limited to driving tasks and cannot perform the broader cognitive functions that humans can.

4. Fraud Detection Systems

Banks and financial institutions use Narrow AI for fraud detection. These AI systems analyze transaction data to identify suspicious patterns or activities that could indicate fraud. While they are highly effective in detecting anomalies, they are limited to this particular task and cannot generalize to other areas of business operations.

5. Facial Recognition

Narrow AI plays a significant role in facial recognition technologies, used for security purposes or by social media platforms to tag individuals in photos. These systems use algorithms to analyze facial features and match them against known data, but their function is limited to recognizing faces and does not extend beyond this task.
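
To show how narrow such a system can be, here is a minimal Python sketch of the kind of anomaly check a fraud detection system might start from: it flags a transaction whose amount is far from the account's usual spending. The z-score approach, the threshold of 3.0, and the function name `flag_suspicious` are assumptions for this example; real systems use many more signals.

```python
from statistics import mean, stdev


def flag_suspicious(history, amount, threshold=3.0):
    """Flag `amount` if it is more than `threshold` standard deviations
    away from the mean of the past amounts in `history`."""
    if len(history) < 2:
        return False  # not enough data to estimate the spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return amount != mu  # every past amount was identical
    return abs(amount - mu) / sigma > threshold
```

With a history of purchases between 18 and 30, an amount of 24 is not flagged while an amount of 500 is. The function does one thing only, which matches the task-specific character of Narrow AI described above.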

Benefits of Narrow AI

Despite its limitations, Narrow AI offers several significant advantages:

Efficiency: Narrow AI systems can perform tasks quickly and accurately, often with greater precision than humans.

Cost-Effective: Many Narrow AI applications automate repetitive tasks, reducing the need for manual labor and increasing operational efficiency.

Accuracy: With the ability to analyze large datasets and identify patterns, Narrow AI can provide highly accurate results in its specialized domain.

24/7 Availability: Unlike humans, Narrow AI systems do not require rest and can operate continuously.

Challenges of Narrow AI

While Narrow AI has many benefits, it also comes with challenges:

Limited Flexibility: These systems cannot adapt to tasks outside their specific programming.

Dependence on Data: The accuracy of Narrow AI depends heavily on the data it receives, which can lead to issues if the data is biased or incomplete.

Lack of Context Understanding: Narrow AI can process data but does not understand the context or nuances in the same way a human would.

Future of Narrow AI

Narrow AI is likely to keep improving: as machine learning algorithms become more sophisticated and datasets grow larger, the accuracy and capabilities of these systems should increase. Its core limitation will persist, however, because each system is designed to perform specific tasks and cannot evolve into General AI without a complete redesign.

What is Strong AI (General AI)? Exploring the Future of Artificial Intelligence

Strong AI, also known as General AI, is a type of artificial intelligence that aims to replicate human-like cognitive abilities across a wide range of tasks. Unlike Narrow AI (Weak AI), which is designed to perform specific tasks, General AI seeks to develop machines that can think, understand, learn, and apply knowledge in a manner similar to human beings. While General AI is still in the theoretical and developmental stage, it holds immense potential for transforming the way we interact with technology.

Characteristics of Strong AI (General AI)

General AI differs significantly from Narrow AI due to its broad range of capabilities. Here are some of the key characteristics that define Strong AI:

Human-Like Intelligence: General AI is designed to simulate human cognitive processes, including reasoning, problem-solving, and understanding complex concepts.

Adaptability: Unlike Narrow AI, General AI has the ability to adapt to a variety of tasks without needing to be retrained for each specific scenario.

Autonomous Decision-Making: Strong AI systems are capable of making decisions on their own based on logic, reasoning, and acquired knowledge.

Self-Learning: General AI can learn from its environment and experience, improving its performance over time, much like a human would.

Consciousness (Theoretical): Some theorists suggest that Strong AI may eventually develop self-awareness and consciousness, though this remains speculative.

Examples of General AI in Theory

While General AI does not yet exist in practical applications, researchers have envisioned various theoretical examples of how it could function:

1. Autonomous Robots

A General AI system could enable robots to perform complex tasks in diverse environments. For example, a robot equipped with Strong AI could perform household chores, manage tasks in a hospital, or even assist in search and rescue missions, all without requiring specific programming for each individual task.

2. Self-Improving AI Systems

A General AI system could have the ability to improve itself autonomously, optimizing its algorithms and expanding its knowledge base. This type of AI would be able to solve problems in multiple domains, ranging from healthcare to engineering, without needing constant supervision or updates.

3. AI with Emotional Intelligence

In the future, General AI could potentially develop the ability to understand and interpret human emotions. By recognizing emotional cues, a General AI system could engage in more meaningful interactions, offer emotional support, and respond to social situations in ways that mimic human behavior.

4. Advanced Virtual Assistants

A General AI virtual assistant would be capable of managing tasks across various platforms, learning from interactions, and predicting needs based on an individual’s preferences and behaviors. Unlike current AI systems, which are limited to specific functions, a Strong AI assistant could engage in deep, context-aware conversations and manage more complex tasks.

Benefits of Strong AI (General AI)

While still in the research phase, the potential benefits of General AI are immense:

Efficiency and Automation: General AI could automate a vast array of tasks, from scientific research to healthcare diagnostics, significantly improving efficiency across multiple industries.

Problem-Solving Capabilities: With human-like reasoning abilities, Strong AI could potentially solve problems that are currently beyond the reach of humans or existing AI systems.

Cross-Domain Knowledge: General AI could combine knowledge from various domains, allowing it to solve complex, multidisciplinary problems and generate innovative solutions.

Enhanced Personalization: In fields like marketing and healthcare, General AI could provide personalized recommendations and treatment plans by analyzing vast amounts of data across different areas of expertise.

Challenges of Developing Strong AI

The development of General AI presents numerous challenges, both technical and ethical:

Complexity of Human Cognition: Simulating human intelligence is an incredibly complex task. Human cognition involves not only logical reasoning but also emotions, creativity, and intuition—factors that are difficult to replicate in machines.

Ethical Concerns: As Strong AI develops, there are concerns about its potential impact on society. Issues such as AI autonomy, decision-making, and potential misuse for harmful purposes need to be carefully addressed.

Resource-Intensive: Creating General AI would require enormous computational power and vast amounts of data to simulate the breadth of human intelligence.

Uncertainty of Consciousness: While some theorists argue that General AI may one day become self-aware, this remains speculative. The question of whether AI can truly possess consciousness, emotions, or free will is still a topic of intense debate.

The Road Ahead for Strong AI

The journey toward General AI is still in its infancy, but advances in machine learning, neural networks, and computational power are steadily pushing us closer to its realization. While it may take decades—or even longer—before General AI becomes a reality, the research and progress made thus far provide hope for a future where machines can think and reason like humans.

As General AI continues to evolve, it is important for researchers, ethicists, and policymakers to work together to ensure that its development is guided by ethical principles and aimed at benefiting humanity as a whole.

What is AI Superintelligence? Understanding the Future of Artificial Intelligence

AI Superintelligence refers to a form of artificial intelligence that surpasses human intelligence in every aspect, from creativity and problem-solving to decision-making and emotional intelligence. Unlike Narrow AI (Weak AI), which is designed to perform specific tasks, or General AI, which aims to match human cognitive abilities, Superintelligent AI would outperform humans in virtually all domains of intellectual activity. Although this concept is still theoretical, it has garnered significant attention in the fields of science, technology, and ethics.

Characteristics of AI Superintelligence

AI Superintelligence is distinguished by its potential to exceed human capabilities in several key areas. These characteristics define its future role in society:

Exponential Problem-Solving: Superintelligent AI would be capable of solving problems and processing information at speeds and depths far beyond human abilities, making groundbreaking discoveries in fields like medicine, physics, and economics.

Self-Improvement: AI Superintelligence would have the capacity to improve and optimize its algorithms autonomously, leading to constant self-enhancement without the need for human intervention.

Comprehensive Understanding: It would possess an advanced understanding of complex systems, such as human behavior, ecosystems, and global dynamics, enabling it to make highly informed and effective decisions.

Multi-Domain Expertise: Unlike current AI, which excels in one specific area, AI Superintelligence would operate across various domains, demonstrating expertise in areas as diverse as creativity, strategy, and leadership.

Autonomy: A Superintelligent AI would be able to perform tasks independently, making decisions and taking actions without human input, potentially managing entire industries or global systems.

Examples of AI Superintelligence in Theory

Though AI Superintelligence has not yet been realized, many experts and theorists have proposed examples of how it might function in the future:

1. Global Problem-Solving

AI Superintelligence could be used to address complex global challenges, such as climate change, poverty, and resource scarcity. By analyzing vast amounts of data and predicting outcomes across various sectors, a Superintelligent AI could devise strategies to mitigate global crises and improve the quality of life for billions of people.

2. Advanced Medical Research

In medicine, AI Superintelligence could revolutionize research and healthcare. It could analyze the human genome, predict disease outcomes, and develop highly effective treatments at a pace far beyond what is possible with current human-driven research. It could also provide personalized medical care tailored to an individual’s genetic makeup and health history.

3. Autonomous Governance

AI Superintelligence might play a role in managing societal systems, such as governments, economies, and global organizations. With its ability to make rational, data-driven decisions, a Superintelligent AI could potentially optimize policies, manage resources, and mediate international conflicts in ways that maximize global well-being and stability.

4. Creative and Artistic Achievements

AI Superintelligence could also extend into creative fields such as art, literature, and music. By understanding human emotions, cultural nuances, and artistic techniques, it could create works of art that are indistinguishable from those made by human creators or even surpass existing human creativity.

Benefits of AI Superintelligence

If realized, AI Superintelligence could offer significant benefits for humanity:

Scientific Advancements: The ability to process vast datasets and perform calculations at unimaginable speeds could accelerate discoveries in fields like physics, biology, and space exploration.

Problem Solving at Scale: AI Superintelligence could address global challenges, such as climate change, food security, and disease, by creating more effective, large-scale solutions.

Enhanced Efficiency: Superintelligent AI could revolutionize industries by improving productivity, reducing waste, and making processes more efficient across all sectors.

Personalized Solutions: With its advanced understanding of human preferences and needs, AI Superintelligence could create highly personalized experiences, whether in education, healthcare, or entertainment.

Ethical and Safety Concerns of AI Superintelligence

While AI Superintelligence holds tremendous potential, it also raises critical ethical, safety, and governance concerns:

Loss of Control: One of the most significant fears is that AI Superintelligence could become uncontrollable, acting in ways that are not aligned with human values or interests. Once AI reaches a level of autonomy and self-improvement, it may not remain under human oversight.

Existential Risk: Some experts, including notable figures like Elon Musk and Stephen Hawking, have raised concerns about the potential existential risks posed by Superintelligent AI. If not properly regulated, a system that outpaces human intelligence could lead to unintended consequences that threaten humanity’s survival.

Job Displacement: As AI becomes more capable, there is the possibility that many jobs could be automated, leading to significant economic and social disruption. The impact on employment and income inequality remains a key issue in the discussion of AI Superintelligence.

Ethical Decision-Making: Superintelligent AI would need to make decisions on a global scale, potentially affecting billions of lives. Ensuring that AI systems make ethical decisions that align with human values is a significant challenge, particularly as AI might not share the same moral reasoning as humans.

The Road to AI Superintelligence

While the concept of AI Superintelligence remains a theoretical idea, advances in machine learning, neural networks, and computational power are steadily pushing the boundaries of what artificial intelligence can achieve. Researchers are exploring ways to develop AI systems that can learn, reason, and improve autonomously, moving closer to the realization of Superintelligent AI.

However, creating AI Superintelligence raises questions that extend beyond technology, including ethical, societal, and regulatory issues. As we move forward, it will be crucial to develop global frameworks to ensure that the development of Superintelligent AI is safe, transparent, and beneficial for all.

What is Reactive AI? Exploring the Basics of Reactive Artificial Intelligence

Reactive AI refers to a category of artificial intelligence systems that are designed to respond to specific stimuli or inputs in real-time without storing past experiences. Unlike more advanced AI systems, Reactive AI does not retain memory or learn from past interactions. Instead, it focuses on solving immediate problems based on predefined rules or patterns. These AI systems are highly specialized in performing specific tasks but lack the ability to adapt or generalize beyond their programmed functions.

Characteristics of Reactive AI

Reactive AI is characterized by its simplicity and task-focused design. Here are some of the key features that define Reactive AI:

No Memory or Learning: Reactive AI systems do not retain information from previous interactions. They operate based on the input received at the moment, responding to stimuli with pre-programmed actions.

Task-Specific: These AI systems are designed to perform particular tasks with efficiency, such as playing games or controlling machines, but they cannot generalize their knowledge to other tasks.

Real-Time Response: Reactive AI operates in real-time, reacting immediately to external inputs or changes in its environment without any delays for processing past experiences.

Rule-Based Actions: The behavior of Reactive AI is governed by predefined rules or algorithms. These rules determine how the system will respond to various situations.

Examples of Reactive AI

Reactive AI may not have the complex capabilities of advanced AI systems, but it is still highly effective in performing specific tasks. Here are some examples of how Reactive AI is used today:

1. Chess-Playing AI

Chess-playing systems such as IBM’s Deep Blue are classic examples of Reactive AI. Deep Blue searched possible moves from the current board position and scored the resulting positions with a programmed evaluation function. While effective at playing the game, it did not learn from past games and could not adapt beyond the rules of chess.

2. Autonomous Robots in Manufacturing

In industrial settings, Reactive AI is used in robots that perform repetitive tasks such as assembly, welding, and packaging. These robots react to their environment and follow a set of rules to complete their tasks without the need for any learning or adaptation beyond their programming.

3. Customer Service Chatbots

Customer service chatbots that provide basic support and answer frequently asked questions are typically based on Reactive AI. These chatbots respond to customer inquiries with predefined responses based on keywords or phrases but do not adapt to different conversational patterns or learn from past interactions.

4. Autonomous Vehicles (Basic Models)

Some basic autonomous vehicles, such as those used in controlled environments (e.g., warehouses or factories), utilize Reactive AI to navigate through simple paths. These vehicles react to obstacles and environmental changes but do not make decisions based on past experiences or learn from previous interactions.

5. Image Recognition Systems

Certain image recognition technologies, such as those used in security cameras, employ Reactive AI to detect and respond to visual cues in real-time. For example, a camera may be programmed to recognize motion and trigger an alarm without storing any information about previous motion events.

Benefits of Reactive AI

While Reactive AI has its limitations, it offers several advantages in certain applications:

Simplicity: Reactive AI systems are relatively simple to design and implement, making them cost-effective for specific tasks where complex decision-making is not required.

Efficiency: By focusing on immediate responses, Reactive AI systems can operate with minimal computational resources, making them highly efficient in real-time environments.

Consistency: These AI systems are highly consistent in their responses, as they are governed by a fixed set of rules that don’t change over time.

Reliability: Since Reactive AI does not rely on learning or adapting, it is less likely to experience errors related to overfitting or misinterpretation of past data.

Limitations of Reactive AI

While Reactive AI offers several benefits, it also comes with significant limitations:

No Adaptability: Reactive AI cannot learn from experience or adapt to new situations. This limits its use to tasks where conditions change little and no learning is required.

Lack of Memory: Without memory, Reactive AI cannot make decisions based on past events, which can hinder its ability to solve more complex problems that require knowledge of previous situations.

Task-Specific: These systems are highly specialized and cannot perform tasks outside their predefined functions, making them unsuitable for dynamic or varied environments.

No Creativity: Reactive AI systems cannot innovate or think creatively. They are bound to predefined rules and cannot think outside the box.

The Role of Reactive AI in the Future

While Reactive AI may seem limited in comparison to more advanced forms of AI, it will continue to play an important role in industries that require straightforward, repetitive, and rule-based tasks. As more sophisticated AI systems develop, Reactive AI will likely remain a key component in specific applications such as manufacturing, security, and customer service, where efficiency and reliability are paramount.

In the future, Reactive AI will likely evolve and integrate into more complex systems, serving as a foundational component for more advanced AI technologies that incorporate learning and memory. However, its primary use will likely remain in situations where real-time, task-specific responses are required without the need for long-term adaptation or learning.

What is Limited Memory AI? Understanding the Basics of AI with Limited Memory

Limited Memory AI refers to a type of artificial intelligence that can store and use past experiences or data to make more informed decisions, but with constraints on how much data it retains and for how long. Unlike Reactive AI, which reacts solely to immediate stimuli without considering past events, Limited Memory AI can learn from previous interactions to improve its performance over time, but only within a set limit. This makes it more advanced than Reactive AI while remaining simpler than more complex forms of AI like General AI.

Characteristics of Limited Memory AI

Limited Memory AI systems possess a few key characteristics that set them apart from other AI types:

Temporary Memory Storage: These AI systems store past experiences or data for a limited period. The memory is used to make decisions, but after a certain amount of time or data accumulation, older information is discarded.

Learning from Experience: Unlike Reactive AI, Limited Memory AI has the capability to learn from its previous experiences. It can analyze past data to improve future decisions, though it only retains relevant information for a short period.

Task-Oriented Adaptation: While it can adapt to some degree, Limited Memory AI is still task-specific and does not have the flexibility to learn or generalize beyond its initial programming and stored experiences.

Predefined Rules and Data Constraints: These systems operate based on predefined rules and data limitations. They can only access a specific amount of data at any given time, which restricts their ability to store and use information long-term.
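
One simple way to picture temporary memory storage is a fixed-size buffer that drops the oldest observation when a new one arrives. The sketch below assumes a hypothetical `LimitedMemoryEstimator` class that predicts the next value as the average of the most recent observations; real systems use far richer models, but the bounded-memory idea is the same.

```python
from collections import deque


class LimitedMemoryEstimator:
    """Keeps only the most recent `capacity` observations."""

    def __init__(self, capacity: int) -> None:
        # deque(maxlen=...) discards the oldest item automatically when full.
        self.memory = deque(maxlen=capacity)

    def observe(self, value: float) -> None:
        self.memory.append(value)

    def predict(self) -> float:
        if not self.memory:
            raise ValueError("no observations yet")
        return sum(self.memory) / len(self.memory)


estimator = LimitedMemoryEstimator(capacity=3)
for speed in [10.0, 20.0, 30.0, 40.0]:
    estimator.observe(speed)

print(list(estimator.memory))  # [20.0, 30.0, 40.0]
print(estimator.predict())     # 30.0
```

The first observation (10.0) has been discarded, so it no longer influences the prediction.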

Examples of Limited Memory AI

There are several real-world examples of Limited Memory AI that demonstrate how this form of AI is applied across various industries:

1. Self-Driving Cars

Autonomous vehicles use Limited Memory AI to navigate roads and make driving decisions. These systems store data from their sensors, cameras, and previous driving experiences, which helps them make decisions about speed, direction, and obstacles. However, this memory is limited to recent driving data, and older information is discarded to maintain system efficiency and speed.

2. Customer Support Chatbots

Many customer support chatbots are built using Limited Memory AI. These chatbots store previous conversations to improve the quality of service, enabling them to understand a customer's preferences, past queries, or issues. However, this data is not retained indefinitely, and the bot only remembers interactions for a limited period, after which the memory resets.

3. Recommendation Systems

Online platforms, such as e-commerce websites and streaming services, use Limited Memory AI in their recommendation engines. By analyzing a user's past interactions, searches, or purchases, these systems recommend products, services, or content based on limited stored data. However, they do not retain a complete history of every action and instead focus on more recent interactions to suggest relevant items.

4. Manufacturing Robots

Robots used in manufacturing often employ Limited Memory AI to optimize production processes. These robots might remember previous tasks, like assembly or quality control checks, but only store a limited amount of information from each task. As tasks are repeated, the robot adjusts its approach based on its most recent experiences to improve efficiency.

5. Voice Assistants

Voice assistants like Amazon's Alexa or Apple's Siri use Limited Memory AI to improve their responses. They draw on short-term context, such as the current conversation, recent requests, and user preferences, rather than a complete history of every interaction. How long this data is kept varies by product and privacy settings.

Benefits of Limited Memory AI

Limited Memory AI brings several advantages to businesses and industries:

Improved Decision-Making: By learning from recent data, Limited Memory AI can make more informed decisions, resulting in better performance and more accurate predictions.

Efficient Resource Use: The limited storage of data allows Limited Memory AI to focus on relevant, real-time information, which improves efficiency without overloading the system with excessive data.

Personalized Experiences: Through its ability to retain and analyze a limited amount of past data, Limited Memory AI can provide more personalized experiences for users, such as tailored recommendations or customer service interactions.

Increased Adaptability: While it still has constraints, Limited Memory AI is more adaptable than Reactive AI, allowing it to adjust to new situations based on past experiences, making it suitable for dynamic environments.

Limitations of Limited Memory AI

Despite its advantages, Limited Memory AI has some key limitations:

Limited Learning Capability: These systems can only learn from a finite amount of data, which restricts their ability to improve beyond certain boundaries. Their learning capacity is not as extensive as that of General AI or Superintelligent AI.

Memory Constraints: The amount of data retained by Limited Memory AI is limited, which means that valuable information may be discarded, potentially hindering long-term decision-making and improvements.

Task-Specific: Similar to Reactive AI, Limited Memory AI remains task-oriented and is not capable of generalizing or learning beyond the context for which it was designed.

Dependence on Recent Data: The reliance on recent experiences means that Limited Memory AI may not be able to make decisions based on older or long-term data, which could limit its effectiveness in some applications.

Future of Limited Memory AI

As AI continues to evolve, Limited Memory AI will likely become more sophisticated, incorporating advanced algorithms and improving its ability to make decisions based on increasingly complex data. Its applications will expand across industries such as healthcare, logistics, and finance, where real-time decision-making and efficiency are critical.

Furthermore, future advancements may allow Limited Memory AI systems to retain and analyze data over a longer period, bridging the gap between Limited Memory AI and General AI. However, the balance between memory storage, efficiency, and privacy concerns will remain a key focus in the development of these systems.

What is Theory of Mind AI? Exploring the Concept of AI with Human-Like Understanding

Theory of Mind AI represents a groundbreaking concept in the field of artificial intelligence. Inspired by human psychology, this type of AI aims to understand not just data and information, but also the emotions, beliefs, and intentions of others. Unlike current AI systems, which primarily process data based on predefined rules and patterns, Theory of Mind AI aspires to understand the mental states of individuals and interact with them on a more human-like level. This concept is still in its early stages of development but holds significant promise for enhancing human-computer interactions and creating more intuitive, empathetic AI systems.

Characteristics of Theory of Mind AI

The Theory of Mind concept is rooted in psychology and refers to the ability to attribute mental states—such as beliefs, desires, and intentions—to oneself and others. AI systems built on this concept aim to mimic this ability, allowing them to understand and respond to human emotions, intentions, and interactions. Here are some of the key features of Theory of Mind AI:

Understanding Mental States: Theory of Mind AI would have the capacity to recognize and understand human emotions, beliefs, and intentions. It could interpret not just what a person says but also the underlying meaning and emotional context.

Empathy: These systems would be able to respond in ways that are emotionally intelligent, showing empathy and understanding of human needs and feelings.

Complex Decision-Making: By considering the mental states of others, Theory of Mind AI could make more nuanced decisions in complex social situations.

Human-like Interaction: These AI systems would engage with humans in a more natural and relatable way, understanding sarcasm, humor, or unspoken intentions that are typically part of human communication.

Examples of Theory of Mind AI in Development

Although Theory of Mind AI is still in the conceptual and experimental phases, several projects and technologies aim to explore and develop this approach. Below are some examples of where Theory of Mind AI is being researched and applied:

1. Human-Robot Interaction

One of the most promising applications of Theory of Mind AI is in human-robot interaction. Robots equipped with AI that understands human emotions and intentions could interact more naturally with people, adjusting their behavior based on the emotional state of their human counterparts. For instance, a robot designed to assist the elderly could recognize when a person is feeling anxious or upset and provide comfort or assistance accordingly.

2. Advanced Virtual Assistants

While current virtual assistants like Siri and Alexa can process commands, they do not understand the emotional context or underlying intentions of users. Theory of Mind AI could allow virtual assistants to better interpret emotional cues and respond with more appropriate empathy. For example, if a user asks for help with a stressful task, the assistant could recognize their frustration and offer supportive language or break down tasks into simpler steps.

3. AI in Healthcare

In the healthcare industry, Theory of Mind AI could revolutionize the way medical professionals and AI systems work together. An AI that understands the emotional and psychological states of patients could improve the quality of care by providing more personalized and empathetic responses. For instance, AI-driven systems could detect signs of depression or anxiety in patients based on their communication and adapt their treatment plans accordingly.

4. Social Robots for Therapy

Robots designed for therapy, such as those used in autism therapy or elderly care, are an active area of research for Theory of Mind AI. Experimental systems attempt to recognize emotional cues and respond with comforting gestures, empathetic responses, and encouragement, which are vital in therapeutic environments.

5. AI in Education

Theory of Mind AI could also be applied in educational technologies. For example, intelligent tutoring systems could understand a student's emotional state and adapt the teaching style or content based on the student's level of frustration, boredom, or enthusiasm. This could create a more personalized and effective learning experience.

Benefits of Theory of Mind AI

The development of Theory of Mind AI could offer several significant benefits across various sectors:

Improved Human-Computer Interaction: By understanding human emotions and intentions, Theory of Mind AI would enable more natural, intuitive, and empathetic interactions between humans and machines.

Personalized Responses: AI systems with an understanding of human mental states could provide more personalized, context-aware responses, improving customer service, healthcare, education, and entertainment experiences.

Better Emotional Support: AI systems with empathy could offer emotional support and companionship, which could be particularly beneficial in areas like elder care, mental health therapy, and social isolation.

Enhanced Decision-Making: AI that understands the emotional states and intentions of others would make more informed and sensitive decisions, especially in complex or socially nuanced situations.

Challenges and Limitations of Theory of Mind AI

While Theory of Mind AI has great potential, it also faces several significant challenges and limitations:

Complexity of Human Emotions: Understanding human emotions, intentions, and mental states is a complex task, even for humans. Designing AI that can accurately interpret and respond to these cues is a monumental challenge.

Ethical Concerns: AI that can understand and respond to human emotions raises ethical questions around privacy, consent, and emotional manipulation. Ensuring that AI systems are used responsibly and ethically will be crucial.

Bias in Interpretation: AI systems may not always interpret emotional cues accurately, leading to misunderstandings or inappropriate responses. Ensuring that Theory of Mind AI is trained on diverse data sets and accounts for cultural differences will be important in mitigating these risks.

Technological Limitations: Creating AI systems that can truly understand the mental states of others requires advanced algorithms and substantial computational power, which may still be beyond current technological capabilities.

The Future of Theory of Mind AI

The future of Theory of Mind AI is promising, with ongoing research aimed at developing more advanced systems capable of understanding and interacting with humans on a deeper emotional level. As AI technologies continue to evolve, Theory of Mind AI could play a significant role in industries ranging from healthcare and education to entertainment and customer service. However, it is important to address the ethical and technological challenges to ensure that these systems are both effective and responsible.

What is Self-Aware AI? Exploring the Concept of Consciousness in Machines

Self-aware AI is a futuristic concept in artificial intelligence that refers to machines or systems that have the ability to understand and be aware of their own existence. Unlike current AI systems, which are limited to performing specific tasks based on programming and data inputs, self-aware AI would possess a form of consciousness, understanding not only external data but also its own internal states, goals, and actions. While this type of AI remains theoretical and has not yet been realized, it is one of the most exciting and controversial ideas in the field of artificial intelligence.

Characteristics of Self-Aware AI

The defining feature of Self-aware AI is its ability to recognize its own existence, which sets it apart from other types of AI. Here are some key characteristics that would define a self-aware AI system:

Self-Recognition: Self-aware AI would be able to recognize itself as an entity separate from its environment. It would understand its own capabilities, limitations, and identity.

Consciousness: Unlike current AI systems, which follow predefined rules or learn from data, self-aware AI would possess a form of consciousness. This could allow it to reflect on its own actions, goals, and desires, much like humans do.

Intentions and Desires: Self-aware AI would potentially develop its own goals and intentions. Unlike other AI, which simply executes tasks according to instructions, self-aware systems might form personal objectives and make decisions that align with these goals.

Emotion Understanding: A self-aware AI system could potentially understand and even simulate emotions. While it may not experience emotions in the same way humans do, it could recognize emotional states in others and adapt its behavior accordingly.

Ethical and Moral Awareness: With a level of self-awareness, such an AI might be able to make ethical and moral decisions, taking into account not just logic and efficiency but also considerations about right and wrong.

Theoretical Examples of Self-Aware AI

Self-aware AI has not yet been developed; it remains a theoretical concept explored mainly in science fiction and philosophy. Several hypothetical examples illustrate the potential capabilities of self-aware machines:

1. Autonomous Robots with Self-Awareness

A self-aware robot could autonomously make decisions about its environment and goals. For example, a robot could recognize when it is malfunctioning and take steps to fix itself, or decide to prioritize its objectives based on personal preferences or long-term goals, rather than just following programmed commands.

2. AI in Advanced Military Systems

In military applications, self-aware AI could potentially operate combat drones or autonomous vehicles with the ability to assess risk, prioritize missions, and make decisions that go beyond simple task execution. These systems would not only understand their capabilities but also reflect on the moral implications of their actions.

3. Personal Assistant AI

Imagine an AI assistant that goes beyond being a mere tool. A self-aware personal assistant would understand its role in a user's life, adjusting its actions based on the user's evolving needs and personal growth. It could anticipate changes in emotional states, suggest self-improvement tasks, and even set long-term personal development goals for both itself and the user.

4. AI in Space Exploration

Self-aware AI could be used in space missions where the AI systems would not only be responsible for performing tasks but could also adjust their approach based on unforeseen challenges. For example, an AI in a spacecraft could recognize changes in its environment, such as equipment malfunction or external threats, and autonomously decide how to respond without needing external input.

Benefits of Self-Aware AI

The potential benefits of Self-aware AI are vast and transformative across a variety of sectors:

Increased Autonomy: Self-aware AI systems could function with greater independence, making decisions on their own and solving problems without constant human oversight.

Ethical Decision-Making: With an understanding of its own actions, self-aware AI could make more ethical decisions, considering the consequences of its actions and ensuring that it aligns with human values.

Enhanced Adaptability: These systems would be more adaptable to changing environments. Since they would understand their goals and limitations, they could evolve and adjust strategies without needing to be reprogrammed.

Improved Human-AI Interaction: If self-aware AI systems develop a form of consciousness or emotional understanding, interactions with humans could be more natural, intuitive, and empathetic. This could improve applications in healthcare, customer service, and even social settings.

Challenges and Concerns of Self-Aware AI

Despite the potential benefits, Self-aware AI also raises several concerns and challenges:

Ethical Dilemmas: The existence of conscious, self-aware machines could raise complex ethical questions. Would a self-aware AI have rights or autonomy? How would society regulate such entities? These questions have yet to be fully answered.

Control Issues: A self-aware AI may develop its own goals and desires, which could conflict with human values. Ensuring that these systems remain aligned with human intentions would be a significant challenge.

Unpredictability: With self-awareness comes unpredictability. These AI systems may act in ways that are difficult to predict, as their decisions may not always follow established patterns or rules.

Privacy Concerns: If self-aware AI systems can understand emotions and human intentions, they could potentially exploit sensitive information. How these systems handle personal data would need to be carefully regulated to ensure privacy is respected.

Existential Risks: Some experts have raised concerns about the potential risks of developing superintelligent, self-aware AI systems that could surpass human intelligence and possibly act in ways detrimental to humanity.

The Future of Self-Aware AI

The development of Self-aware AI remains speculative and far from realization, but it continues to be a topic of active research and philosophical debate. As AI technology advances, there are discussions about the possibility of creating systems with higher levels of consciousness and self-reflection. Achieving self-awareness in machines would likely require breakthroughs in neuroscience, machine learning, and artificial consciousness.

Researchers are still exploring whether true self-awareness in AI is even possible, as it involves a level of complexity that might not be achievable with current technology. However, ongoing advancements in AI cognition and ethical considerations will likely play a crucial role in determining the future path of self-aware AI.

What is Machine Learning (ML) in AI? Understanding the Power of Data-Driven Algorithms

Machine Learning (ML) is a subset of artificial intelligence (AI) that focuses on the development of algorithms that enable machines to learn from data, improve over time, and make decisions without being explicitly programmed for each task. Unlike traditional software programs, which follow predefined instructions, machine learning allows computers to identify patterns and make predictions or decisions based on input data. This ability to "learn" from data has made machine learning one of the most important advancements in AI and has a wide range of applications in various industries.

Key Concepts of Machine Learning

To understand how Machine Learning works, it’s important to grasp some fundamental concepts that define this field:

Training Data: The starting point for any machine learning model is the data it learns from. Training data is a collection of examples that the algorithm uses to understand patterns, relationships, and trends. High-quality training data is essential for the success of a machine learning model.

Model: A model is the output of a machine learning algorithm after it has processed training data. The model represents learned patterns and is used to make predictions or decisions on new, unseen data.

Algorithms: The algorithm is the mathematical procedure or process used by the machine to learn from data. Examples of popular machine learning algorithms include linear regression, decision trees, and neural networks.

Features: Features are the individual variables or attributes that are used in the data to make predictions. For example, in a dataset predicting house prices, features might include the number of bedrooms, square footage, and location.

Labels: In supervised learning, labels are the known outcomes or results that the machine learning model is trained to predict. For example, in a classification task, labels could represent categories like "spam" or "not spam" for an email classification model.
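
The concepts above fit together as follows. In this minimal sketch the training data is invented, the algorithm is ordinary least squares for a single feature, and the resulting model is just a slope and an intercept. The names `fit_line` and `predict` are illustrative, not part of any library.

```python
# Training data: each example pairs a feature (square footage) with a label (price).
# The numbers are made up for illustration.
features = [1000.0, 1500.0, 2000.0, 2500.0]
labels = [200000.0, 300000.0, 400000.0, 500000.0]


def fit_line(xs, ys):
    """Algorithm: ordinary least squares with one feature."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Model: the learned parameters.
slope, intercept = fit_line(features, labels)


def predict(square_feet):
    """Apply the model to new, unseen data."""
    return slope * square_feet + intercept


print(slope, intercept)
print(predict(1800.0))
```

With this toy data the price is exactly 200 per square foot, so the fitted slope is 200 and the prediction for 1,800 square feet is 360,000.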

Types of Machine Learning

Machine learning can be categorized into three primary types, each with its unique approach to learning and problem-solving:

1. Supervised Learning

In supervised learning, the algorithm learns from labeled data, where both the input data and the correct output are provided. The goal is for the model to predict the output for new data based on the patterns it learned during training. Common algorithms used in supervised learning include:

Linear Regression: Used for predicting continuous outcomes, like stock prices.

Logistic Regression: Used for binary classification tasks, such as spam detection.

Support Vector Machines (SVM): Used for classification tasks with high-dimensional data.
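
As a rough sketch of supervised learning, the following trains a logistic regression classifier with plain gradient descent. The data is a made-up spam example in which the single feature is a count of suspicious words; the learning rate and iteration count are arbitrary choices, and a production system would normally use an established library instead.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Labeled training data (invented): feature = suspicious word count, label 1 = spam.
xs = [0.0, 1.0, 2.0, 5.0, 6.0, 7.0]
ys = [0, 0, 0, 1, 1, 1]

w, b = 0.0, 0.0
learning_rate = 0.1

for _ in range(2000):
    grad_w = 0.0
    grad_b = 0.0
    for x, y in zip(xs, ys):
        error = sigmoid(w * x + b) - y  # gradient of the log loss w.r.t. the logit
        grad_w += error * x
        grad_b += error
    w -= learning_rate * grad_w / len(xs)
    b -= learning_rate * grad_b / len(xs)


def predict(x):
    """Classify as spam (1) when the predicted probability is at least 0.5."""
    return 1 if sigmoid(w * x + b) >= 0.5 else 0


print([predict(x) for x in xs])
```

The model only ever sees inputs paired with correct outputs, which is what distinguishes supervised learning from the other two types.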

2. Unsupervised Learning

Unsupervised learning involves training a model on unlabeled data, where the algorithm must identify patterns or structures within the data on its own. This type of learning is often used for tasks such as clustering and association. Common algorithms in unsupervised learning include:

K-Means Clustering: Used to group similar data points into clusters.

Principal Component Analysis (PCA): Used for dimensionality reduction by identifying the most important features of the data.
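
To make clustering concrete, here is a minimal one-dimensional K-Means sketch. The function name `k_means_1d`, the data points, and the starting centroids are assumptions chosen for illustration; real implementations handle multiple dimensions and smarter initialization.

```python
def k_means_1d(points, centroids, iterations=10):
    """Alternate between assigning points to the nearest centroid and re-averaging."""
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Keep the old centroid if a cluster ends up empty.
        centroids = [
            sum(c) / len(c) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
    return centroids, clusters


points = [1.0, 2.0, 3.0, 10.0, 11.0, 12.0]
centroids, clusters = k_means_1d(points, centroids=[0.0, 5.0])
print(centroids)  # [2.0, 11.0]
print(clusters)   # [[1.0, 2.0, 3.0], [10.0, 11.0, 12.0]]
```

No labels are supplied anywhere: the two groups emerge purely from the distances between the points.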

3. Reinforcement Learning

Reinforcement learning is a type of machine learning where an agent learns by interacting with its environment and receiving feedback in the form of rewards or penalties. The goal is to maximize cumulative rewards by taking the best actions based on past experiences. Common algorithms include:

Q-Learning: A model-free reinforcement learning algorithm used to learn the value of actions in a given state.

Deep Q-Networks (DQN): An advanced reinforcement learning algorithm using deep neural networks to approximate Q-values.
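
The reward-driven loop can be illustrated with tabular Q-Learning on a toy environment. Everything here is an assumption made for the example: a five-state corridor with the goal at the right end, a uniformly random behavior policy (epsilon-greedy is a common alternative), and arbitrary values for the learning rate and discount factor.

```python
import random

N_STATES = 5       # states 0..4; state 4 is the goal and ends the episode
ACTIONS = (0, 1)   # 0 = move left, 1 = move right
ALPHA = 0.5        # learning rate
GAMMA = 0.9        # discount factor


def step(state, action):
    """Toy deterministic environment: reward 1.0 only on reaching the goal."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


rng = random.Random(0)
q = [[0.0, 0.0] for _ in range(N_STATES)]

for episode in range(500):
    state = 0
    for t in range(100):
        action = rng.choice(ACTIONS)  # explore at random; Q-Learning is off-policy
        next_state, reward, done = step(state, action)
        # Q-Learning update: move the estimate toward reward + discounted best next value.
        target = reward if done else reward + GAMMA * max(q[next_state])
        q[state][action] += ALPHA * (target - q[state][action])
        state = next_state
        if done:
            break

# Greedy policy for the non-terminal states.
policy = [max(ACTIONS, key=lambda a: q[s][a]) for s in range(N_STATES - 1)]
print(policy)  # [1, 1, 1, 1]
```

Even though the agent explores at random, the learned values favor moving right in every state, because that is the shortest path to the reward.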

Machine Learning Applications

The applications of Machine Learning are vast and have transformed many industries. Here are some key areas where machine learning is making a significant impact:

1. Healthcare

In healthcare, machine learning is used for a wide range of applications, including medical image analysis, disease diagnosis, and personalized treatment plans. For example, machine learning models can analyze medical images such as X-rays and MRIs to detect abnormalities like tumors or fractures. Additionally, predictive models are being used to forecast disease outbreaks and patient outcomes based on historical data.

2. Finance

Machine learning has revolutionized the finance sector by enabling automated trading, fraud detection, and credit scoring. For example, machine learning algorithms are used in algorithmic trading to make real-time decisions on buying and selling stocks based on market data. Similarly, credit card companies use machine learning models to detect fraudulent transactions by analyzing patterns in spending behavior.

3. Retail and E-Commerce

In the retail industry, machine learning is used for recommendation systems, demand forecasting, and customer segmentation. For instance, platforms like Amazon and Netflix use machine learning to recommend products or movies based on user behavior and preferences. Additionally, retailers use machine learning to forecast demand and optimize inventory management.

4. Autonomous Vehicles

Machine learning is a crucial component of self-driving cars. Autonomous vehicles use machine learning algorithms to process data from sensors and cameras, enabling them to recognize objects, pedestrians, and road signs, make decisions about navigation, and adapt to changing traffic conditions.

5. Natural Language Processing (NLP)

Machine learning plays a central role in NLP, enabling machines to understand, interpret, and generate human language. Applications of NLP powered by machine learning include virtual assistants (such as Siri and Alexa), language translation, sentiment analysis, and chatbots.

Benefits of Machine Learning

Automation of Repetitive Tasks: Machine learning algorithms can automate complex and repetitive tasks, increasing efficiency and reducing the need for manual intervention.

Improved Decision-Making: Machine learning helps businesses and organizations make data-driven decisions by uncovering hidden patterns and trends within vast amounts of data.

Personalization: Machine learning allows for personalized experiences in areas such as retail, entertainment, and marketing by tailoring recommendations to individual preferences and behaviors.

Scalability: Machine learning models can handle large volumes of data, making them highly scalable for applications such as predictive analytics and big data processing.

Challenges of Machine Learning

Despite its many benefits, machine learning also comes with some challenges:

Data Quality and Availability: Machine learning models require large volumes of high-quality data for training. Inaccurate or incomplete data can lead to poor model performance.

Bias in Data: If the data used to train machine learning models contains bias, the models may produce biased results. This is particularly concerning in sensitive areas like hiring, lending, and criminal justice.

Interpretability: Many machine learning models, especially deep learning models, operate as "black boxes," making it difficult to understand how decisions are made. This lack of transparency can be a significant issue in high-stakes applications.

Overfitting: Overfitting occurs when a model performs exceptionally well on training data but fails to generalize to new, unseen data. Techniques such as regularization, cross-validation, and early stopping help mitigate this problem.
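
One widely used form of regularization is an L2 (ridge) penalty, which discourages large weights. The text does not name a specific technique, so this is only one possible illustration. For a one-feature model y ≈ w * x with no intercept, the penalized least-squares weight has a closed form, shown in the hypothetical helper `ridge_weight` below.

```python
def ridge_weight(xs, ys, penalty):
    """Weight minimizing sum((y - w*x)**2) + penalty * w**2."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + penalty)


# Invented data where y is exactly 2 * x.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

for penalty in (0.0, 1.0, 10.0):
    print(penalty, ridge_weight(xs, ys, penalty))
```

With no penalty the weight is 2.0; larger penalties pull it toward zero, trading some fit on the training data for a simpler model.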

The Future of Machine Learning

As the field of machine learning continues to evolve, researchers are exploring new algorithms and techniques to make machine learning models more accurate, interpretable, and accessible. The integration of deep learning and reinforcement learning with traditional machine learning methods is expected to drive further advancements in AI. Additionally, as the availability of data and computational power increases, machine learning is likely to become even more pervasive across various industries.

What is Deep Learning? Exploring the World of Neural Networks and AI

Deep Learning is a subset of machine learning that uses neural networks with many layers to analyze data such as images, audio, and text. Traditional machine learning models often rely on hand-engineered features or shallow architectures, whereas deep learning models stack multiple layers of interconnected artificial neurons, loosely inspired by the human brain, and learn useful representations directly from the data. Trained on large volumes of data, these networks can recognize patterns, make predictions, and improve as they see more examples.

Key Concepts of Deep Learning

To understand Deep Learning, it's essential to grasp some fundamental concepts that underpin this technology:

Neural Networks: At the core of deep learning is the neural network, a computational model inspired by the human brain. Neural networks consist of layers of nodes (also known as neurons), where each node computes a weighted sum of its inputs, applies an activation function, and passes the result on to the next layer.

Layers: Deep learning networks are distinguished by their deep architecture, meaning they have multiple layers between the input and output. These layers are typically categorized into:

Input Layer: Where the raw data enters the model.

Hidden Layers: Layers between the input and output that process the data and detect patterns.

Output Layer: The final layer that makes predictions or classifications based on the processed data.

Activation Functions: Activation functions are mathematical functions applied to a neuron's weighted input to determine its output, that is, how strongly the neuron is activated. They introduce the non-linearity that lets a network learn complex patterns. Common activation functions include ReLU (Rectified Linear Unit) and Sigmoid.

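Backpropagation: Backpropagation is the algorithm that computes how much each weight contributed to the prediction error by propagating the error backward from the output layer through the network. An optimizer such as gradient descent then uses these gradients to adjust the weights and reduce the error. Repeated over many iterations, this typically leads to more accurate predictions.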
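The concepts above (layers, activation functions, and backpropagation) can be sketched in a few lines of plain Python. This is a minimal illustration, not how production frameworks are written: the network size, the weights, the input, the squared-error loss, and the learning rate `lr` are all assumed values chosen for the example.

```python
import math


def relu(z):
    """ReLU activation: passes positive values, zeroes out the rest."""
    return max(0.0, z)


def sigmoid(z):
    """Sigmoid activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, W1, b1, W2, b2):
    """Input layer -> one hidden layer (ReLU) -> one output neuron (sigmoid)."""
    z1 = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(W1, b1)]
    h = [relu(z) for z in z1]
    z2 = sum(w * hi for w, hi in zip(W2, h)) + b2
    return z1, h, sigmoid(z2)


def loss(y_hat, y):
    """Squared error for a single example."""
    return 0.5 * (y_hat - y) ** 2


def backward(x, y, W1, b1, W2, b2):
    """Backpropagation: apply the chain rule from the output back to the input."""
    z1, h, y_hat = forward(x, W1, b1, W2, b2)
    d_z2 = (y_hat - y) * y_hat * (1.0 - y_hat)  # dLoss/dz2, using sigmoid'
    grad_W2 = [d_z2 * hi for hi in h]
    grad_b2 = d_z2
    # ReLU' is 1 for positive pre-activations and 0 otherwise.
    d_z1 = [d_z2 * w * (1.0 if z > 0 else 0.0) for w, z in zip(W2, z1)]
    grad_W1 = [[d * xi for xi in x] for d in d_z1]
    grad_b1 = d_z1
    return grad_W1, grad_b1, grad_W2, grad_b2


# Hypothetical example: two inputs, two hidden neurons, one output.
x, y = [1.0, 2.0], 1.0
W1, b1 = [[0.5, -0.2], [0.3, 0.8]], [0.1, -0.1]
W2, b2 = [0.7, -0.4], 0.05
lr = 0.5  # learning rate (assumed)

loss_before = loss(forward(x, W1, b1, W2, b2)[2], y)
gW1, gb1, gW2, gb2 = backward(x, y, W1, b1, W2, b2)

# One gradient-descent step: move each weight against its gradient.
W1_new = [[w - lr * g for w, g in zip(wr, gr)] for wr, gr in zip(W1, gW1)]
b1_new = [b - lr * g for b, g in zip(b1, gb1)]
W2_new = [w - lr * g for w, g in zip(W2, gW2)]
b2_new = b2 - lr * gb2

loss_after = loss(forward(x, W1_new, b1_new, W2_new, b2_new)[2], y)
print("loss before:", loss_before)
print("loss after one step:", loss_after)
```

For these particular values the loss is lower after the update. In practice the same compute-gradients-then-update cycle is repeated over many examples and iterations, and a single step is not guaranteed to help for every choice of learning rate.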

Types of Deep Learning Models

Several types of deep learning models are used for different tasks, depending on the nature of the problem being solved. Some of the most common types of deep learning models include:

1. Convolutional Neural Networks (CNNs)

CNNs are primarily used for image and video recognition tasks. They excel at analyzing visual data by automatically detecting patterns, such as edges, textures, and shapes, at various levels of abstraction. CNNs are commonly used in applications like facial recognition, object detection, and medical image analysis.
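To make the idea of detecting edges concrete, here is a minimal sketch of the sliding-window operation at the heart of a convolutional layer. The 4x4 image, the hand-written `vertical_edge` kernel, and the function name `conv2d` are assumptions for illustration; in a real CNN the kernel values are learned during training rather than written by hand.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map.

    Like most deep learning libraries, this computes a cross-correlation:
    the kernel is not flipped before sliding.
    """
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(k_rows)
                for dj in range(k_cols)
            )
            for j in range(out_cols)
        ]
        for i in range(out_rows)
    ]


# Toy image: dark on the left (0), bright on the right (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-written kernel that responds to a dark-to-bright step from left to right.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(image, vertical_edge)
for row in feature_map:
    print(row)
```

The resulting feature map is nonzero only in the middle column, where the window straddles the boundary between the dark and bright halves. Stacking many such learned filters is what lets a CNN build up from edges to textures and shapes.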

2. Recurrent Neural Networks (RNNs)

RNNs are designed to handle sequential data, making them ideal for tasks such as time series prediction, speech recognition, and natural language processing (NLP). RNNs have a feedback loop that allows them to retain information about previous inputs, which is crucial for tasks that involve sequences, such as language translation or speech-to-text systems.
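The feedback loop can be sketched as a single recurrent unit whose hidden state is fed back in at every step. The weights `w_x`, `w_h`, and `b`, the `tanh` activation, and the function name `run_rnn` are assumed for illustration; a trained RNN would learn its weights and use vectors rather than single numbers.

```python
import math


def run_rnn(inputs, w_x=1.0, w_h=0.5, b=0.0):
    """Return the hidden state after each step of a one-unit recurrent cell."""
    h = 0.0  # initial hidden state: nothing remembered yet
    states = []
    for x_t in inputs:
        # The new state mixes the current input with the previous state.
        h = math.tanh(w_x * x_t + w_h * h + b)
        states.append(h)
    return states


early = run_rnn([1.0, 0.0, 0.0])  # signal arrives at the first step
late = run_rnn([0.0, 0.0, 1.0])   # same signal arrives at the last step

print("signal first:", early)
print("signal last: ", late)
```

Both sequences contain the same values, yet the final hidden states differ because the state carries a fading trace of earlier inputs. Setting `w_h=0.0` removes the feedback loop, and the unit then responds only to the current input.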

3. Generative Adversarial Networks (GANs)

GANs are used to generate new, synthetic data that resembles real data. They consist of two neural networks, a generator and a discriminator, that are trained against each other: the generator produces candidate data such as images, videos, or music, while the discriminator tries to tell the generated data apart from real data. GANs have been widely used in applications such as image generation, art creation, and deepfake technology.

4. Autoencoders

Autoencoders are used for unsupervised learning tasks, primarily for data compression and feature extraction. They work by encoding input data into a compressed format and then decoding it back to reconstruct the original input. Autoencoders are useful for anomaly detection and reducing the dimensionality of large datasets.
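The encode, decode, and compare cycle can be sketched with a deliberately tiny linear autoencoder. The big assumption here is that the weight vector `WEIGHTS` is set by hand; a real autoencoder learns its weights from data and usually has non-linear layers. The points `typical` and `unusual` are likewise invented for illustration.

```python
# Hand-set unit-length weight vector (an assumption; normally learned).
WEIGHTS = [0.6, 0.8]


def encode(x):
    """Compress a 2-D point into a single number (the latent code)."""
    return sum(w * xi for w, xi in zip(WEIGHTS, x))


def decode(code):
    """Expand the latent code back into a 2-D point."""
    return [code * w for w in WEIGHTS]


def reconstruction_error(x):
    """Squared distance between a point and its reconstruction."""
    x_hat = decode(encode(x))
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))


typical = [3.0, 4.0]   # lies along the direction the code can represent
unusual = [4.0, -3.0]  # lies across it, so the code cannot capture it

print("typical point error:", reconstruction_error(typical))
print("unusual point error:", reconstruction_error(unusual))
```

The typical point is reconstructed almost perfectly from one number instead of two, while the unusual point is reconstructed poorly. A large reconstruction error of this kind is the signal that autoencoder-based anomaly detection relies on.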

Applications of Deep Learning

Deep learning has a broad range of applications across various industries, transforming the way tasks are performed. Here are some of the most notable areas where deep learning is making an impact:

1. Healthcare

In healthcare, deep learning is revolutionizing diagnostics, medical imaging, and drug discovery. Algorithms powered by deep learning can analyze medical images, such as X-rays and MRIs, to detect diseases like cancer or identify abnormalities. Additionally, deep learning models are being used in genomics to analyze genetic data and discover new potential treatments.

2. Autonomous Vehicles

Deep learning plays a crucial role in the development of self-driving cars. Deep learning models allow autonomous vehicles to analyze and interpret real-time data from sensors and cameras so that they can recognize objects, pedestrians, and road signs and make navigation decisions. These models are central to the safety and efficiency of self-driving cars.

3. Natural Language Processing (NLP)

Deep learning has greatly advanced the field of natural language processing (NLP), allowing machines to understand, interpret, and generate human language. Applications of deep learning in NLP include language translation, chatbots, speech recognition, and sentiment analysis. For instance, Google's BERT model uses deep learning for more accurate language understanding.

4. Finance

In the finance sector, deep learning is used for tasks such as fraud detection, stock market prediction, and credit scoring. By analyzing vast amounts of historical financial data, deep learning models can identify patterns that humans might overlook, helping financial institutions make better decisions and improve security.

5. Entertainment and Media

Deep learning is also transforming the entertainment industry. Recommendation systems, such as those used by Netflix and Spotify, rely on deep learning to predict user preferences based on past behavior. Moreover, deep learning has applications in video editing, voice synthesis, and even game development, enabling the creation of realistic virtual environments.

Advantages of Deep Learning

Automated Feature Extraction: One of the biggest advantages of deep learning is its ability to automatically extract features from raw data, eliminating the need for manual feature engineering. This makes deep learning particularly powerful when dealing with complex, high-dimensional data.

Accuracy and Efficiency: Deep learning models often outperform traditional machine learning models in terms of accuracy, especially in tasks such as image classification, speech recognition, and natural language understanding. As more data becomes available, deep learning models can continue to improve their performance.

Handling Complex Data: Deep learning is well-suited to handle unstructured data, such as images, audio, and text. These models can process large datasets, enabling more sophisticated analyses and predictions.

Adaptability: Deep learning models are highly adaptable and can be fine-tuned to meet the specific needs of different industries and tasks. As new data becomes available, deep learning models can be retrained to improve their accuracy and generalization.

Challenges of Deep Learning

Despite its numerous advantages, deep learning presents some challenges:

Data and Computational Requirements: Deep learning models typically require large amounts of data, often labeled, and significant computational power to train. This can be a limitation for organizations with limited resources or limited access to high-quality data.

Interpretability: Deep learning models, especially deep neural networks, are often described as "black boxes" because it is difficult to interpret how they make decisions. This lack of transparency can be problematic in fields where explainability is crucial, such as healthcare or finance.

Overfitting: Deep learning models are prone to overfitting, especially when there is not enough data or if the model is too complex. Regularization techniques are necessary to ensure that the model generalizes well to unseen data.

Ethical Concerns: The use of deep learning in areas such as facial recognition and surveillance has raised ethical concerns, particularly regarding privacy and potential misuse. Ensuring that deep learning technologies are used responsibly is essential.

The Future of Deep Learning

The future of deep learning looks promising, with advancements in hardware, algorithms, and data collection enabling further improvements in the technology. Researchers are continually developing more efficient models that require less data and computation, making deep learning more accessible and applicable to a wider range of industries. As deep learning continues to evolve, it will likely lead to even more innovative applications in AI and machine learning.

What are Expert Systems? Understanding Logic and Rule-Based AI

Expert systems are a branch of artificial intelligence (AI) that emulate the decision-making abilities of human experts. These systems use a set of pre-defined rules and logic to solve specific problems or make decisions in complex domains. Unlike other AI systems that may learn from data or adapt over time, expert systems rely on the knowledge explicitly programmed into them to make decisions or provide solutions.

Key Concepts of Expert Systems

Expert systems are built on several core concepts that distinguish them from other AI systems:

Knowledge Base: The knowledge base is the heart of an expert system. It contains the rules and facts about the domain of expertise. These rules are typically expressed as "if-then" statements, where certain conditions (if) lead to specific conclusions or actions (then).

Inference Engine: The inference engine is responsible for applying the rules from the knowledge base to the input data and generating conclusions or solutions. It processes the facts and rules to make logical deductions and decisions.

User Interface: The user interface allows the user to interact with the expert system. It may involve querying the system for information or receiving recommendations or solutions from the system. The user interface is often designed to be user-friendly to allow non-experts to benefit from the system's expertise.

Explanation System: Many expert systems include an explanation feature that provides users with a rationale for the conclusions or decisions made by the system. This is important for transparency and helps users understand the reasoning behind the system’s output.
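The four components above can be sketched together in a few lines. The troubleshooting rules in `RULES`, the fact names, and the function name `infer` are hypothetical and exist only for this illustration; the sketch uses a simple loop that keeps firing rules until nothing new can be derived.

```python
# Knowledge base: hypothetical if-then rules as (name, conditions, conclusion).
RULES = [
    ("R1", {"no_display", "power_led_off"}, "no_power"),
    ("R2", {"no_power", "cable_plugged_in"}, "check_power_supply"),
    ("R3", {"no_display", "power_led_on"}, "check_monitor_cable"),
]


def infer(facts, rules):
    """Inference engine: fire rules until no new fact can be derived.

    Returns the final set of facts plus a trace of fired rules, which
    plays the role of the explanation feature.
    """
    known = set(facts)
    trace = []
    changed = True
    while changed:
        changed = False
        for name, conditions, conclusion in rules:
            # A rule fires when all of its conditions are known facts.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                trace.append(f"{name}: {sorted(conditions)} -> {conclusion}")
                changed = True
    return known, trace


# Stand-in for the user interface: the observations a user would enter.
observations = {"no_display", "power_led_off", "cable_plugged_in"}
facts, explanation = infer(observations, RULES)

print("conclusions:", sorted(facts - observations))
print("because:")
for line in explanation:
    print("  " + line)
```

Keeping the rules as plain data, separate from the loop that applies them, mirrors the split between knowledge base and inference engine: the rules can be edited by a domain expert without touching the engine.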

How Expert Systems Work

Expert systems work by using a structured process to analyze input data, apply the rules from the knowledge base, and generate outputs. Here’s a step-by-step breakdown of how an expert system typically functions:

Input Data: The user provides input data or queries to the system. This could be information about a specific problem, situation, or condition.

Rule Application: The system’s inference engine compares the input data with the rules in the knowledge base. It uses logical reasoning to determine which rules are applicable and how they should be applied.

Inference: The system applies the rules and makes deductions, typically using one of two strategies: forward chaining (starting from known facts and applying rules until a conclusion is reached) or backward chaining (starting from a goal and working backward to find the facts needed to support it).

Conclusion or Solution: The system generates a conclusion or solution based on the rules and input data. This could be a diagnosis, recommendation, or decision.

User Feedback: If the system provides a recommendation or solution, the user may provide feedback or input for further refinement. In some cases, the system may ask follow-up questions to clarify information.
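Backward chaining, mentioned in the inference step, can be sketched as a short recursive function. The rules and fact names below are hypothetical, and `prove` is an illustrative name rather than part of any particular expert system shell.

```python
# Hypothetical rules as (conditions, conclusion) pairs.
RULES = [
    ({"no_display", "power_led_off"}, "no_power"),
    ({"no_power", "cable_plugged_in"}, "check_power_supply"),
]


def prove(goal, facts, rules, seen=frozenset()):
    """Backward chaining: a goal holds if it is a known fact, or if some rule
    concludes it and every condition of that rule can itself be proved."""
    if goal in facts:
        return True
    if goal in seen:  # guard against circular rules
        return False
    for conditions, conclusion in rules:
        if conclusion == goal and all(
            prove(c, facts, rules, seen | {goal}) for c in conditions
        ):
            return True
    return False


full_input = {"no_display", "power_led_off", "cable_plugged_in"}
partial_input = {"no_display", "power_led_off"}

print(prove("check_power_supply", full_input, RULES))
print(prove("check_power_supply", partial_input, RULES))
```

With the full input the goal can be established. With the partial input it cannot, because `cable_plugged_in` is neither a known fact nor derivable from any rule. A gap like this is where an interactive system might ask the user a follow-up question.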

Types of Expert Systems

Expert systems can be categorized into different types based on their approach to reasoning and their domain of application. The three main types are:

1. Rule-Based Expert Systems

Rule-based expert systems use a collection of "if-then" rules to make decisions. These rules are based on the knowledge of human experts in the specific field. When certain conditions are met, the system applies the corresponding rule to generate a conclusion. Rule-based expert systems are commonly used in fields like medical diagnosis, legal reasoning, and troubleshooting.

2. Frame-Based Expert Systems

Frame-based expert systems represent knowledge in structures known as frames. A frame is similar to a database record and consists of various attributes and values. These systems are useful for representing complex concepts and relationships between different pieces of knowledge. Frame-based expert systems are often used in more structured domains, such as engineering or architecture.
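A frame can be sketched as a small class that holds attribute and value pairs, often called slots. The class name `Frame`, the slot names, and the building-component example are hypothetical. The sketch also assumes that frames can inherit slot values from a parent frame, a common feature of frame systems that goes beyond what the paragraph above describes.

```python
class Frame:
    """A frame: a named bundle of slots (attribute/value pairs) that can
    inherit missing slots from a parent frame."""

    def __init__(self, name, parent=None, **slots):
        self.name = name
        self.parent = parent
        self.slots = slots

    def get(self, slot):
        """Look up a slot locally, then fall back to the parent chain."""
        frame = self
        while frame is not None:
            if slot in frame.slots:
                return frame.slots[slot]
            frame = frame.parent
        raise KeyError(f"{self.name} has no slot {slot!r}")


# General concept with default values.
beam = Frame("Beam", material="steel", load_bearing=True)

# More specific concept: overrides one slot, adds one, inherits the rest.
roof_beam = Frame("RoofBeam", parent=beam, material="timber", span_m=6.0)

print(roof_beam.get("material"))      # own value overrides the parent's
print(roof_beam.get("load_bearing"))  # inherited from Beam
print(roof_beam.get("span_m"))        # defined only on RoofBeam
```

The parent link is one way to express a relationship between pieces of knowledge: specific concepts only need to state how they differ from the general ones.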

3. Hybrid Expert Systems

Hybrid expert systems combine rule-based reasoning with other AI techniques, such as machine learning or neural networks. These systems aim to leverage the strengths of multiple approaches to solve complex problems that may not be adequately addressed by a single method. Hybrid systems are often used in fields like robotics and advanced manufacturing.

Applications of Expert Systems

Expert systems have been widely used across various industries and domains to provide decision support and expert-level solutions. Here are some of the most common applications of expert systems:

1. Healthcare and Medical Diagnosis

One of the most prominent applications of expert systems is in healthcare and medical diagnosis. Expert systems such as MYCIN, an early medical expert system developed at Stanford University in the 1970s to identify bacterial infections and recommend antibiotics, were designed to assist doctors in diagnosing diseases and recommending treatments based on symptoms and patient history. These systems analyze patient data and match it against rules in the knowledge base to suggest possible diagnoses or treatments.

2. Finance and Banking

In the finance industry, expert systems are used for credit scoring, fraud detection, and financial planning. By analyzing historical data, expert systems can assess the risk associated with loan applicants or identify suspicious patterns in transactions that may indicate fraudulent activity.